DoorDash's Game-Changing Strategy: 70% Hit Ratio in Cache Optimization!

Decentralization and Empowering Efficiency

TL;DR

Problem: The feature store runs on large Redis clusters that are expensive to operate and maintain, yet online prediction requests need features served quickly. This makes it worth exploring an in-process caching layer to improve performance and scalability, especially for repeated (duplicate) requests.

Solution: Add an in-process caching layer inside the microservices to make requests more efficient and reduce dependency on the Redis clusters, improving system speed and scalability.
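To make the idea of an in-process caching layer concrete, here is a minimal sketch in Python. It is not DoorDash's implementation: the class name `InProcessCache`, the LRU-with-TTL policy, the parameter values, and the `fetch_from_redis` stand-in are all assumptions chosen for illustration. It shows the two things the post cares about: serving repeated lookups from process memory, and measuring the hit ratio.

```python
import time
from collections import OrderedDict
from typing import Any, Callable, Hashable


class InProcessCache:
    """Hypothetical LRU cache with a per-entry TTL, held in the service's own memory."""

    def __init__(self, max_entries: int, ttl_seconds: float,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self._max_entries = max_entries
        self._ttl = ttl_seconds
        self._clock = clock
        # key -> (expiry timestamp, value), ordered from least to most recently used
        self._entries: "OrderedDict[Hashable, tuple]" = OrderedDict()
        self.hits = 0
        self.misses = 0

    def get_or_load(self, key: Hashable, loader: Callable[[Hashable], Any]) -> Any:
        """Return the cached value, or call `loader` (the remote store) on a miss."""
        now = self._clock()
        entry = self._entries.get(key)
        if entry is not None and entry[0] > now:
            self._entries.move_to_end(key)  # mark as most recently used
            self.hits += 1
            return entry[1]
        # Missing or expired: go to the remote store and remember the result.
        self.misses += 1
        value = loader(key)
        self._entries[key] = (now + self._ttl, value)
        self._entries.move_to_end(key)
        if len(self._entries) > self._max_entries:
            self._entries.popitem(last=False)  # evict the least recently used entry
        return value

    def hit_ratio(self) -> float:
        total = self.hits + self.misses
        return self.hits / total if total else 0.0


# Stand-in for a Redis lookup (assumption: the real call would hit the feature store).
remote_calls = []

def fetch_from_redis(key):
    remote_calls.append(key)
    return f"value-for-{key}"


cache = InProcessCache(max_entries=1000, ttl_seconds=60.0)
for key in ["a", "b", "a", "a", "b"]:
    cache.get_or_load(key, fetch_from_redis)

# Only the first lookup of each key reaches the remote store.
print(len(remote_calls), cache.hit_ratio())
```

The TTL bounds how stale a cached feature can get, and the entry limit bounds memory per process. Both values would need tuning against real traffic.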

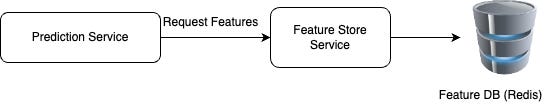

Flow

DoorDash's Sibyl Prediction Service (SPS) makes predictions based on features. If the upstream service does not supply a feature in the request, SPS fetches it from the feature store.
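The lookup order described above can be sketched as a small Python function. This is an illustration only: `resolve_features`, the feature names, and the `fake_feature_store` stand-in are hypothetical and not taken from SPS itself.

```python
from typing import Callable, Dict, Iterable, List

FeatureValues = Dict[str, float]


def resolve_features(
    required: Iterable[str],
    upstream_features: FeatureValues,
    fetch_from_store: Callable[[List[str]], FeatureValues],
) -> FeatureValues:
    """Prefer features sent by the upstream caller; fetch only the rest from the store."""
    required = list(required)
    resolved = {name: upstream_features[name]
                for name in required if name in upstream_features}
    missing = [name for name in required if name not in resolved]
    if missing:
        # A single batched request for everything the caller did not provide.
        resolved.update(fetch_from_store(missing))
    return resolved


# Stand-in for the Redis-backed feature store (assumption for this example).
store_requests = []

def fake_feature_store(names):
    store_requests.append(list(names))
    return {name: 0.0 for name in names}


features = resolve_features(
    required=["store_rating", "prep_time_avg", "distance_km"],
    upstream_features={"distance_km": 2.4},
    fetch_from_store=fake_feature_store,
)
print(features)
```

Every feature that falls through to `fetch_from_store` is a request against Redis, which is why this path is the natural place to put a cache.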

The feature store, mainly housed in Redis, incurs substantial compute costs due to high request volumes for ML features.

DoorDash explores caching and alternative storage solutions to address scalability and cost efficiency concerns.

Implementation of caching is expected to boost prediction performance, reliability, and scalability.

Beyond any immediate gains, caching promises to reduce compute costs and improve platform efficiency over time.