How a 43-Second Network Issue Led to a 24-Hour GitHub Degradation

A Deep Dive into MySQL Failures, Replication Lag, and Recovery Bottlenecks

On October 21 2018, GitHub experienced a 24-hour service degradation that impacted multiple systems, leaving users with outdated and inconsistent data.

The root cause? A routine network maintenance triggered an unexpected failover, forcing GitHub’s database topology into an unrecoverable state. While no user data was permanently lost, manual reconciliation for missing transactions is still ongoing.

Let’s break it down.

🚀 TL;DR

GitHub suffered a critical MySQL failure when network connectivity between data centers dropped for just 43 seconds. This triggered:

✅ An unintended MySQL failover, shifting traffic to a secondary region.

✅ Replication issues, where recent writes were lost between data centers.

✅ Delayed recovery, due to slow MySQL restores and manual reconciliation.

Here’s what we’ll cover:

1️⃣ 🛠️ What Happened? (The chain reaction that broke GitHub's data pipeline)

2️⃣ 🛑 Root Causes (Why the failover led to major inconsistencies)

3️⃣ 🤔 Lessons Learned (How GitHub is changing its failover strategy)

4️⃣ 🏗️ How to Prevent This in Your Own Systems (Paid subscribers get exclusive best practices)

🔍 Background: How GitHub Manages Databases at Scale

At the time, GitHub’s infrastructure ran on multiple MySQL clusters, each ranging from hundreds of gigabytes to nearly five terabytes. These clusters handle non-Git metadata, everything from pull requests and issues to authentication and background processing.

To optimize read-heavy workloads, GitHub employs dozens of read replicas per cluster, reducing the load on primary databases. Writes are directed to the relevant primary for each cluster, while most read requests are offloaded to replicas to keep performance high.

🔹 Database Scaling with Functional Sharding

Different parts of GitHub’s platform use functional sharding, meaning data isn’t stored in a single monolithic database. Instead, clusters are partitioned by function, some store repository metadata, others handle user authentication, and so on.

🔹 Automated Failover with MySQL Orchestrator

To maintain high availability, GitHub relies on Orchestrator, a MySQL failover tool that automates primary election and topology changes. Built on Raft consensus, Orchestrator monitors cluster health and automatically promotes a new primary when failures occur.

But here’s the catch: Orchestrator doesn’t always make topology changes that align with application needs. If a failover occurs too aggressively or across regions, it can introduce data inconsistencies and latency spikes, which is exactly what happened during the October 21 incident.

In short, GitHub’s MySQL setup is built for scale, but failover logic needs to be carefully tuned to avoid unexpected issues.

🛠️ What Happened?

It started as a routine hardware swap.

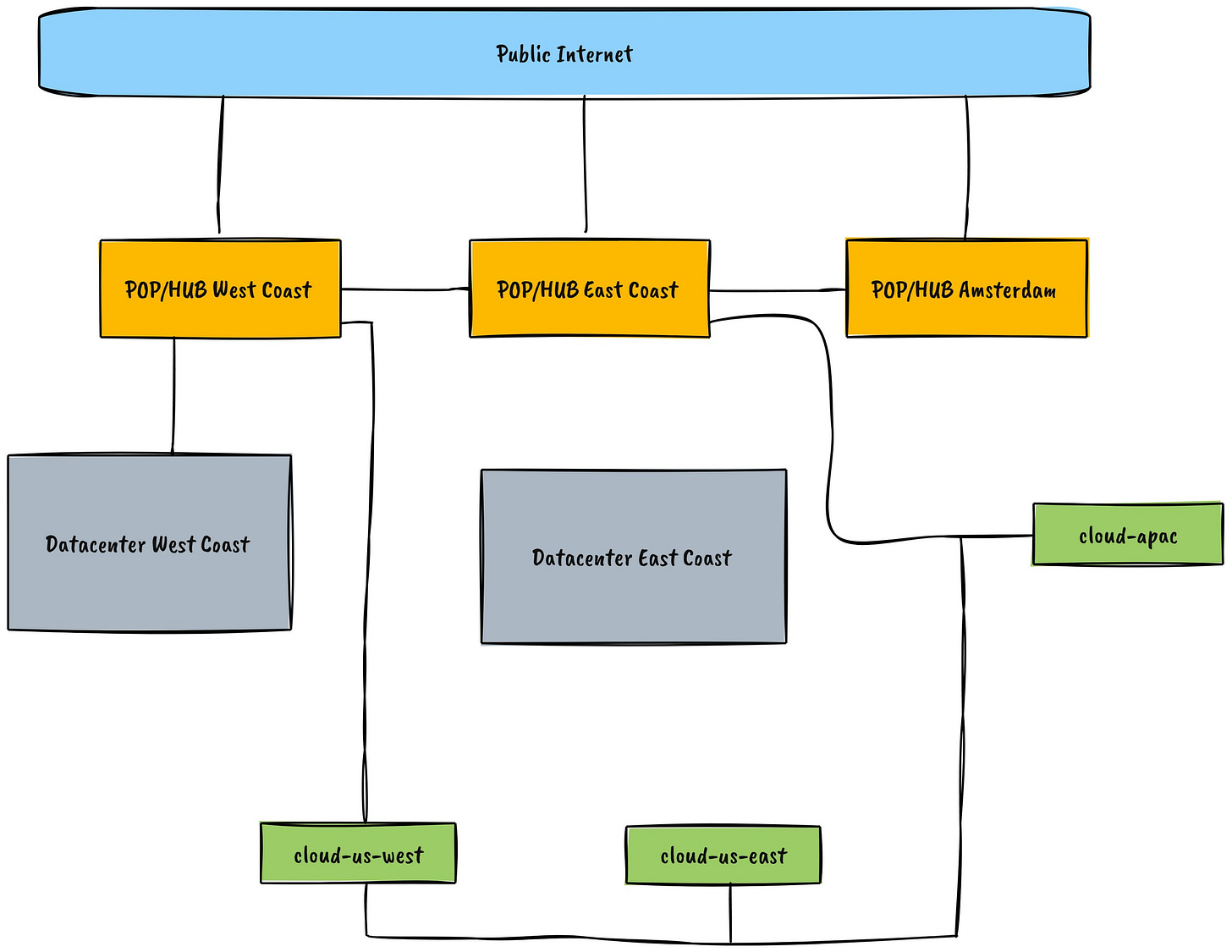

At 22:52 UTC, GitHub replaced a failing 100G optical link between their East Coast data center and their network hub. The maintenance window was expected to be uneventful.

🚨 The problem: Connectivity dropped for 43 seconds, and that was enough to trigger an unintended failover.

GitHub’s MySQL Orchestrator, which manages database topologies, detected the network issue and promoted a secondary database cluster in the West Coast data center.

When connectivity was restored, GitHub’s application layer resumed writing to the new primaries, unaware that:

Some transactions on the old primary were never replicated.

The newly promoted database cluster had missing data.

At this point, two separate MySQL clusters contained conflicting writes—and there was no way to merge them cleanly.

Result: GitHub had to manually reconcile missing data, causing service degradation for over 24 hours.

🛑 Root Causes

1️⃣ Unintentional Cross-Region Failover

MySQL Orchestrator elected new primaries in the wrong region, introducing latency and replication gaps.

2️⃣ Replication Lag Introduced Data Loss

Writes that occurred during the failover window never reached the secondary region, forcing manual data reconciliation.

3️⃣ Slow Backup Restoration Delayed Recovery

Even though backups existed, they were stored remotely and took hours to restore due to data transfer bottlenecks.

4️⃣ GitHub’s Status System Wasn’t Granular Enough

Their red/yellow/green system didn’t show which services were degraded, leading to confusion for users.

🤔 Lessons Learned

This incident reveals some critical best practices for handling distributed database failovers:

1️⃣ Failover Should Consider Data Consistency, Not Just Availability

Failing over to a region with incomplete data created more problems than it solved.

2️⃣ Replication ≠ Backups

A secondary region isn’t a true backup if replication can introduce inconsistencies.

3️⃣ Failover Testing Needs to Simulate Real-World Network Drops

GitHub had never tested failover scenarios where writes were missing across regions.

4️⃣ Disaster Recovery Must Be Fast, Not Just Available

Having a backup isn’t enough—how fast can you restore it?