How Instacart Scales Real-Time Inventory Predictions Across 80,000 Stores

Here’s a dirty secret of on-demand commerce: nobody knows the real inventory state of a grocery store. Not the retailer, not the associate, definitely not you.

Instacart’s entire business depends on making that unknowable world feel predictable.

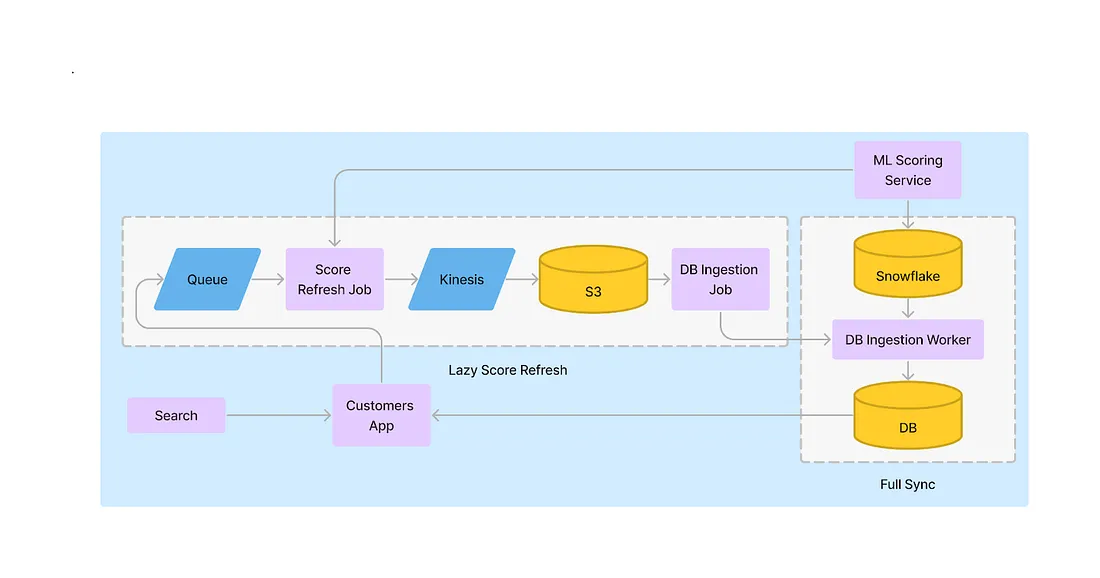

This edition breaks down the engineering architecture Instacart built to simulate a consistent live inventory model across hundreds of millions of items, using a combination of model-driven scoring, lazy refresh pipelines, multi-model experimentation, and a threshold-tuning system that looks more like an F1 control panel than a grocery app.

This is one of those systems where every layer exists because something simpler exploded.

🧠 The Core Problem

Instacart needs to answer one question—fast, correctly, and millions of times per minute:

“If we show this item to a user, how likely is it actually in stock at this specific store… right now?”

This prediction drives:

Search ranking

Product filtering

Shopper routing

Customer trust (“Don’t show me milk if the store is out of milk again”)

The output is a score, a real-time availability probability that feeds downstream systems.
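To make the score's role concrete, here is a minimal sketch of how a consumer like search might use it: drop items below an availability cutoff, then rank the rest. The `Candidate` type, the 0.3 cutoff, and the relevance × availability blend are hypothetical choices for illustration. They are not Instacart's actual ranking logic.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Candidate:
    item_id: str
    relevance: float     # hypothetical score from the search/retrieval stage
    availability: float  # predicted probability the item is in stock at this store


def filter_and_rank(candidates, min_availability=0.3):
    """Drop items unlikely to be in stock, then rank by relevance x availability.

    The cutoff and the multiplicative blend are illustrative assumptions.
    """
    kept = [c for c in candidates if c.availability >= min_availability]
    return sorted(kept, key=lambda c: c.relevance * c.availability, reverse=True)


results = filter_and_rank([
    Candidate("milk-1gal", relevance=0.9, availability=0.15),
    Candidate("milk-half-gal", relevance=0.8, availability=0.95),
    Candidate("oat-milk", relevance=0.6, availability=0.80),
])
print([c.item_id for c in results])  # ['milk-half-gal', 'oat-milk']
```

The most relevant item, the gallon of milk, is dropped because it is probably out of stock. That is the "don't show me milk" behavior in miniature.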

The challenge:

Hundreds of millions of items

80K+ store locations

Score drift happens fast

ML model updates happen constantly

Retrieval systems need bulk reads with low latency

UI surfaces require high consistency

You can’t RPC your way out of this one.
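A back-of-envelope sketch shows why per-request RPCs break down. The numbers below are invented for illustration and are not Instacart measurements: 1,000 retrieval candidates, 20 ms per scoring call, 50 concurrent calls, and a batched database read at 5 ms plus 0.01 ms per key. The cost model is deliberately simple and ignores tail latency and load on the model service.

```python
import math


def per_item_rpc_ms(n_candidates: int, rpc_ms: float, concurrency: int) -> float:
    """Wall time if each candidate needs its own scoring call.

    Calls go out in waves of `concurrency`; each wave costs one round trip.
    """
    waves = math.ceil(n_candidates / concurrency)
    return waves * rpc_ms


def bulk_read_ms(n_candidates: int, base_ms: float, per_key_ms: float) -> float:
    """Wall time for one batched read of precomputed scores."""
    return base_ms + n_candidates * per_key_ms


print(per_item_rpc_ms(1_000, rpc_ms=20.0, concurrency=50))  # 400.0
print(bulk_read_ms(1_000, base_ms=5.0, per_key_ms=0.01))    # 15.0
```

The precomputed read still grows with the candidate count. However, it costs a single round trip to a database rather than many waves of calls into a model service, and it keeps the model off the search request's critical path.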

⚙️ Real-Time Scoring, but at Scale

Instacart receives ML scores from a Real-Time Availability model. But calling the scoring API for every candidate item during search retrieval would have been slower than shopping in real life.

So they introduced two ingestion pipelines to push model outputs into the database ahead of time:

1. Full Sync (Snowflake → DB)

The ML team writes new scores into a Snowflake table multiple times a day

Ingestion workers upsert those scores into the serving DB

Ensures consistency, especially for long-tail items that rarely get queried

This bounds how stale any score can get, but running a full sync over hundreds of millions of items is expensive, both financially and operationally.
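Here is a minimal sketch of the full-sync ingestion side, assuming a serving table keyed by (store, item). In-memory SQLite stands in for the serving DB, and a plain list of rows stands in for the Snowflake export. The schema, batch size, `scored_at` guard, and function names are all hypothetical. The conditional upsert makes replaying a sync idempotent and prevents an older batch from overwriting a newer score. The bulk read shows the payoff of precomputing: one query returns scores for every candidate at a store.

```python
import sqlite3
from itertools import islice

# In-memory SQLite stands in for the serving DB; the schema is hypothetical.
SCHEMA = """
CREATE TABLE IF NOT EXISTS availability_scores (
    store_id  INTEGER NOT NULL,
    item_id   INTEGER NOT NULL,
    score     REAL    NOT NULL,
    scored_at INTEGER NOT NULL,  -- when the model produced this score (epoch seconds)
    PRIMARY KEY (store_id, item_id)
)
"""

# Conditional upsert: insert new rows, update existing ones only if the incoming
# score is newer, so replaying or reordering batches cannot regress a score.
UPSERT = """
INSERT INTO availability_scores (store_id, item_id, score, scored_at)
VALUES (?, ?, ?, ?)
ON CONFLICT (store_id, item_id) DO UPDATE
SET score = excluded.score, scored_at = excluded.scored_at
WHERE excluded.scored_at > availability_scores.scored_at
"""


def batched(rows, size):
    """Yield lists of up to `size` rows from any iterable (e.g. a table export)."""
    it = iter(rows)
    while batch := list(islice(it, size)):
        yield batch


def ingest(conn, rows, batch_size=10_000):
    """Upsert (store_id, item_id, score, scored_at) rows, one transaction per batch."""
    for batch in batched(rows, batch_size):
        with conn:  # commits on success, rolls back if the batch fails
            conn.executemany(UPSERT, batch)


def get_scores(conn, store_id, item_ids):
    """Bulk-read scores for many items at one store in a single query.

    Very large id lists would need chunking to stay under SQLite's bound-parameter limit.
    """
    if not item_ids:
        return {}
    placeholders = ",".join("?" * len(item_ids))
    cur = conn.execute(
        "SELECT item_id, score FROM availability_scores "
        f"WHERE store_id = ? AND item_id IN ({placeholders})",
        [store_id, *item_ids],
    )
    return dict(cur.fetchall())


conn = sqlite3.connect(":memory:")
conn.execute(SCHEMA)
ingest(conn, [(1, 101, 0.92, 1000), (1, 102, 0.40, 1000)])
ingest(conn, [(1, 101, 0.10, 900)])   # older than the stored score: ignored
ingest(conn, [(1, 102, 0.75, 1100)])  # newer: applied
print(sorted(get_scores(conn, 1, [101, 102, 103]).items()))  # [(101, 0.92), (102, 0.75)]
```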

2. Lazy Refresh (Triggered by Search Results)
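The idea behind a lazy refresh is to spend scoring capacity only on items that people are actually seeing. Below is a minimal sketch of that general pattern under our own assumptions; it is not Instacart's published design. An in-memory dict stands in for the serving DB, `score_fn` stands in for a call to the availability model, and the one-hour TTL and deduplicated queue are hypothetical choices. Items that appear in search results are served their current score immediately, and only stale or missing ones are queued for a background rescore.

```python
import time
from collections import deque


class LazyRefresher:
    """Rescore items only when they appear in search results and look stale.

    Hypothetical sketch: `store` stands in for the serving DB, `score_fn` for a
    call to the availability model, and `ttl_s` is an assumed staleness budget.
    """

    def __init__(self, store, score_fn, ttl_s=3600.0, clock=time.time):
        self.store = store        # {(store_id, item_id): (score, scored_at)}
        self.score_fn = score_fn  # (store_id, item_id) -> float
        self.ttl_s = ttl_s
        self.clock = clock
        self.queue = deque()      # keys waiting to be rescored
        self.pending = set()      # keys already queued, to avoid duplicate work

    def on_search_results(self, store_id, item_ids):
        """Return the scores we have right now; enqueue stale or missing ones."""
        now = self.clock()
        served = {}
        for item_id in item_ids:
            key = (store_id, item_id)
            entry = self.store.get(key)
            served[item_id] = entry[0] if entry else None
            stale = entry is None or now - entry[1] > self.ttl_s
            if stale and key not in self.pending:
                self.pending.add(key)
                self.queue.append(key)
        return served

    def drain(self, max_items=1000):
        """One background-worker pass: rescore up to max_items queued keys."""
        done = 0
        while self.queue and done < max_items:
            key = self.queue.popleft()
            self.pending.discard(key)
            self.store[key] = (self.score_fn(*key), self.clock())
            done += 1
        return done


# Usage with a frozen clock and a stub model that always returns 0.42.
store = {(1, 101): (0.9, 9_900.0), (1, 102): (0.5, 1_000.0)}
r = LazyRefresher(store, score_fn=lambda s, i: 0.42, clock=lambda: 10_000.0)
served = r.on_search_results(1, [101, 102, 103])
print(served)       # {101: 0.9, 102: 0.5, 103: None}
refreshed = r.drain()
print(refreshed)    # 2: item 102 was stale, item 103 had no score
```

Serving the existing score right away keeps the search path fast. The trade-off is that the first request after a score goes stale still sees the old value.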
