What OpenAI Understood About Postgres That Most Teams Ignore

How One Postgres Instance Powers 800 Million ChatGPT Users

Every infrastructure architect on the planet will tell you the same thing: single-primary Postgres dies around 10 million users. Maybe 20 million if you’re really good.

OpenAI is at 800 million.

One primary database. 50 read replicas. Millions of queries per second.

And it just keeps working.

They Broke Every Rule We Have About Database Scaling

When ChatGPT launched and traffic went vertical, the playbook said: start sharding, migrate to Cassandra, or pray.

OpenAI looked at that playbook and said “nah.”

Here’s what they noticed: 95% of their traffic is reads. Updates happen, sure. But the overwhelming majority of requests are just fetching data.

Everyone panics about Postgres not scaling. But that’s mostly about writes. Nobody’s really pushed the boundaries on reads with a single writer.

Turns out you can go way, way further than anyone thought.

One Azure Postgres instance handling all writes. Nearly 50 replicas spread across regions handling reads. Double-digit millisecond p99 latency. Five nines uptime.

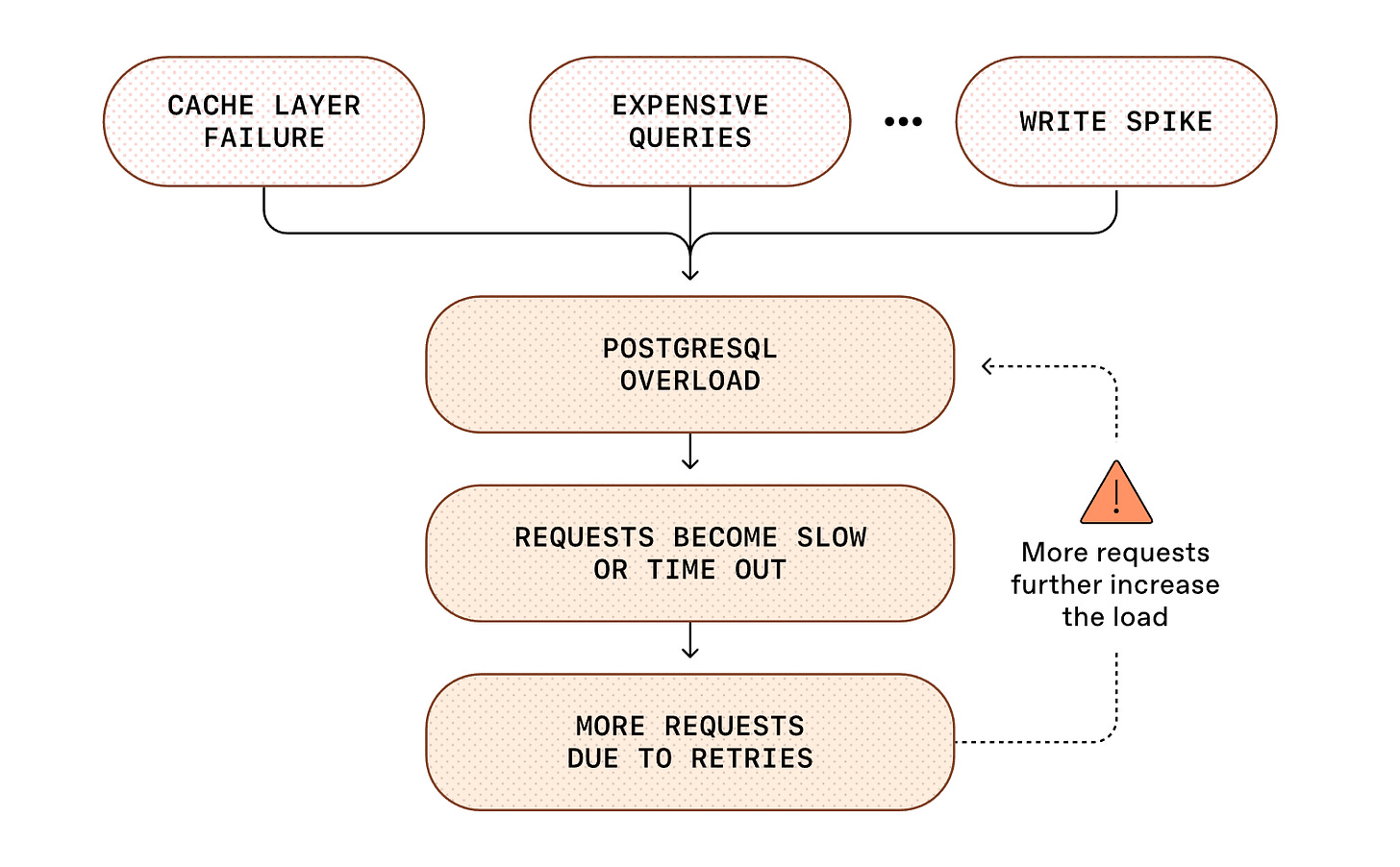

In the last 12 months? One SEV-0 incident. And that was during the ImageGen launch when 100 million people signed up in a week and writes spiked 10x overnight.

Write Traffic Is Where Postgres Falls Apart

Postgres uses something called MVCC. When you update a row, it doesn’t change it in place. It creates a whole new version and marks the old one as dead.

Update a user’s email? New row version. Update it again? Another new version.

All those dead versions sit there until autovacuum cleans them up. And under heavy write load:

Every update copies the entire row (write amplification)

Reads have to scan past dead versions to find the current one (read amplification)

Tables bloat

Indexes bloat

Autovacuum can’t keep up

This is why people say Postgres doesn’t scale. They’re hammering it with writes and hitting a ceiling.

OpenAI just stopped fighting that fight.