How PayPal Slashed Their Big Data Pipeline Costs by 70% with GPUs

Leveraging Spark 3 and the RAPIDS Accelerator to Cut Costs

🚀 TL;DR

Big data can be a resource-hungry beast, and when you’re running an operation as massive as PayPal’s, the stakes are even higher. With 430+ million active users and more than 25 billion payment transactions a year, PayPal’s infrastructure handles data at enormous scale. Machine learning powers nearly every aspect of the business, from fraud detection and recommendations to risk assessment, credit underwriting, and customer support. Data processing and ML workloads at this level demand a solution that is not only scalable but also cost-efficient.

Now, you might be thinking, “Switching to GPUs and saving money? But I thought GPUs are expensive!” On the surface, you’d be right: GPUs often come with a hefty price tag. But PayPal’s story shows how that upfront cost can be outweighed by efficiency gains. On suitable workloads, GPUs finish tasks significantly faster than CPUs, which means fewer machines, shorter runtimes, and a smaller overall cloud bill. The question isn’t whether GPUs are expensive; it’s whether they save more than they cost. In PayPal’s case, the answer was a resounding yes.
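The trade-off can be made concrete with a back-of-the-envelope model: a job’s cloud bill is roughly nodes × hourly price × runtime, so a pricier GPU node still wins if it shortens the runtime enough. The sketch below assumes simple hourly pricing with no storage or data-transfer costs, and the node counts and prices in it are made-up placeholders, not PayPal’s or any provider’s real rates.

```python
def job_cost(nodes: int, hourly_rate: float, hours: float) -> float:
    """Approximate cloud cost of one job: nodes x price per node-hour x runtime."""
    return nodes * hourly_rate * hours


def break_even_speedup(cpu_nodes: int, cpu_rate: float,
                       gpu_nodes: int, gpu_rate: float) -> float:
    """Speedup (CPU runtime / GPU runtime) the GPU cluster needs to be cheaper.

    CPU cost: cpu_nodes * cpu_rate * T
    GPU cost: gpu_nodes * gpu_rate * (T / speedup)
    Setting the two equal and solving for speedup gives the ratio of
    the clusters' hourly prices.
    """
    return (gpu_nodes * gpu_rate) / (cpu_nodes * cpu_rate)


# Hypothetical example: 10 CPU nodes at $1.00/h vs 4 GPU nodes at $3.00/h.
cpu = job_cost(nodes=10, hourly_rate=1.00, hours=5.0)   # 50.0
gpu = job_cost(nodes=4, hourly_rate=3.00, hours=2.0)    # 24.0
needed = break_even_speedup(10, 1.00, 4, 3.00)           # 1.2
print(f"CPU ${cpu:.2f} vs GPU ${gpu:.2f}; GPU wins above a {needed:.1f}x speedup")
```

With these placeholder numbers the GPU cluster costs 1.2x more per hour, so any speedup above 1.2x lowers the bill; the assumed 2.5x speedup cuts it roughly in half.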

They found their solution by combining Apache Spark 3 with NVIDIA GPUs through the RAPIDS Accelerator. By optimizing their Spark jobs to leverage the parallel processing capabilities of GPUs, they drastically reduced runtimes and consolidated infrastructure. The results? Up to 70% savings on cloud costs, faster job completion times, and a more streamlined pipeline for their machine learning and big data needs.

Below, we walk through PayPal’s journey, the technical challenges they overcame, and actionable insights you can apply to your own systems. Let’s dive in! 🚀

What Will We Dive Into Today? 📖

What is Spark RAPIDS?

Why GPUs Make the Difference

When Does Using GPUs Make Sense?

PayPal's 70% Savings

Challenges and Lessons Learned

Insights & Official Resources (Paid)

Understanding the RAPIDS Accelerator

PayPal’s Strategy

When GPUs Can Be Cheaper

Where GPUs Excel

Why Some Teams Use CPUs Instead

CPUs, GPUs, and Specialized AI Chips

When GPUs Won’t Save Money

What is Spark RAPIDS? 🖥️

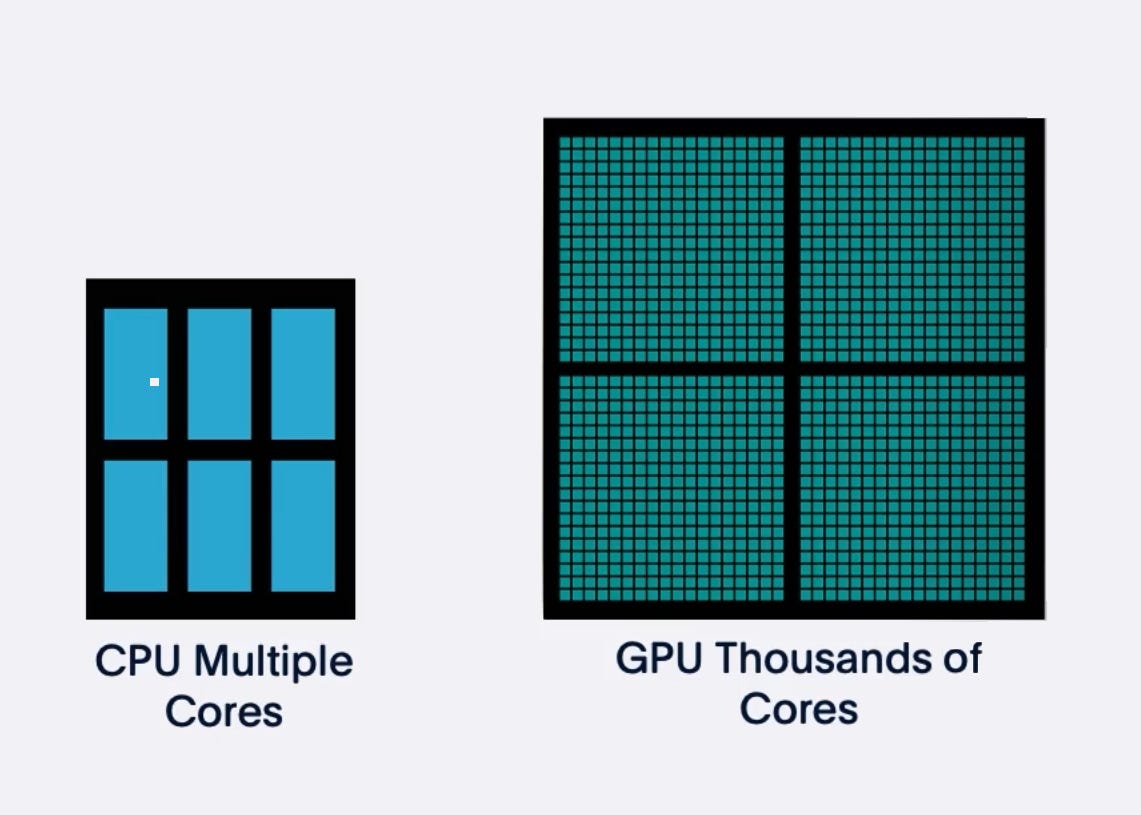

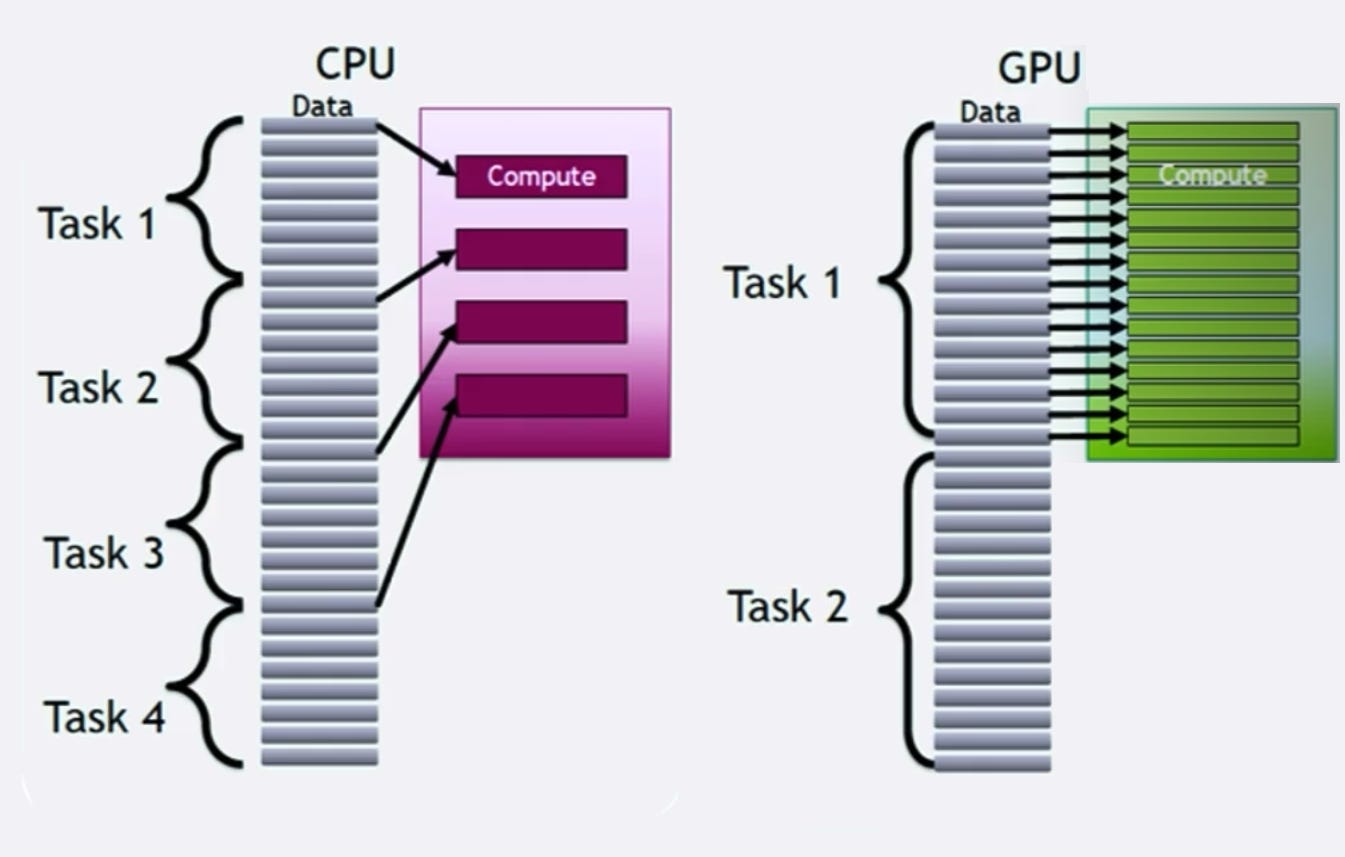

Imagine if your Spark jobs traded their trusty CPU “workhorse” for a fleet of GPU “supercars.” That’s essentially what Spark RAPIDS does. Developed by NVIDIA, the RAPIDS Accelerator for Apache Spark is a plugin that lets Spark offload heavy operations such as joins, group-bys, and sorts onto GPUs, which are built for parallel processing, typically without changes to your DataFrame or SQL code.

GPUs aren’t just faster; they work differently. A CPU has a relatively small number of powerful cores optimized for sequential, branch-heavy work, while a GPU has thousands of simpler cores that apply the same operation to many data elements at once. That suits big data, where the workload is massive, repetitive, and computationally intensive.
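In practice, Spark RAPIDS is switched on through configuration: you put the RAPIDS Accelerator jar on the classpath and register its SQL plugin, com.nvidia.spark.SQLPlugin. The minimal sketch below builds those settings as --conf arguments for spark-submit. The jar path and my_job.py are hypothetical placeholders, and the resource amounts (one GPU per executor, four tasks sharing it) are illustrative assumptions; check the RAPIDS Accelerator documentation for the version you deploy.

```python
import shlex

# Hypothetical jar location; use the rapids-4-spark jar matching your Spark version.
RAPIDS_JAR = "/opt/sparkRapidsPlugin/rapids-4-spark.jar"

RAPIDS_CONF = {
    "spark.jars": RAPIDS_JAR,
    # Registers the RAPIDS SQL plugin, which swaps supported operators for GPU versions.
    "spark.plugins": "com.nvidia.spark.SQLPlugin",
    "spark.rapids.sql.enabled": "true",
    # Spark 3 GPU-aware scheduling: one GPU per executor, shared by 4 tasks (assumed values).
    "spark.executor.resource.gpu.amount": "1",
    "spark.task.resource.gpu.amount": "0.25",
}


def to_submit_args(conf: dict[str, str]) -> list[str]:
    """Flatten a config dict into spark-submit '--conf key=value' arguments."""
    args: list[str] = []
    for key, value in conf.items():
        args += ["--conf", f"{key}={value}"]
    return args


if __name__ == "__main__":
    cmd = ["spark-submit", *to_submit_args(RAPIDS_CONF), "my_job.py"]
    print(shlex.join(cmd))
```

Depending on the cluster manager, executors may also need a GPU discovery script so Spark can find the devices; that part is environment-specific and left out here.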

Why GPUs Make the Difference 🎮

Let’s be real: CPUs are versatile, but they struggle when your dataset balloons into the terabytes. GPUs thrive here. They’re designed for high-throughput workloads, which makes them a natural fit for Spark RAPIDS.

Think of GPUs as marathon runners with rocket boosters: they chew through data faster, so jobs tie up cluster resources for less time. Add the fact that GPUs reduce the number of machines you need, and suddenly you’re saving money and speeding up workflows at the same time.

But not every workload is GPU-friendly, which brings us to the next point.

So When Does This Make Sense? 🤔

To unlock the full potential of Spark RAPIDS, your workloads need to check a few key boxes. First, they must involve massive datasets: think multi-terabyte or petabyte scale. If your job processes only gigabytes, GPUs might not provide enough value to justify the investment.

Second, your workload needs to lean heavily on computational operations like joins, sorts, or aggregations. These are the tasks GPUs eat for breakfast. Finally, you need to run Spark 3 or later; earlier versions lack the columnar processing and GPU-aware scheduling support that the RAPIDS Accelerator depends on.
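The three boxes can be written down as a quick screening function. This is a rough heuristic sketch, not an official rule: the 1 TiB input threshold and the 50% share of runtime spent in joins, sorts, and aggregations are assumed cut-offs chosen for illustration, and JobProfile and is_gpu_candidate are hypothetical names.

```python
from dataclasses import dataclass

GIB = 1024 ** 3  # bytes in a gibibyte
TIB = 1024 ** 4  # bytes in a tebibyte

# Assumed cut-offs for illustration only; tune them against your own benchmarks.
MIN_INPUT_BYTES = 1 * TIB
MIN_HEAVY_OP_SHARE = 0.5


@dataclass
class JobProfile:
    input_bytes: int       # total data the job scans
    heavy_op_share: float  # fraction of runtime spent in joins, sorts, aggregations
    spark_version: str     # e.g. "3.3.2"


def is_gpu_candidate(job: JobProfile) -> bool:
    """Return True only if the job checks all three boxes: size, operation mix, Spark 3+."""
    major = int(job.spark_version.split(".")[0])
    return (
        job.input_bytes >= MIN_INPUT_BYTES
        and job.heavy_op_share >= MIN_HEAVY_OP_SHARE
        and major >= 3
    )


print(is_gpu_candidate(JobProfile(5 * TIB, 0.7, "3.3.2")))   # True
print(is_gpu_candidate(JobProfile(50 * GIB, 0.7, "3.3.2")))  # False: only 50 GiB
print(is_gpu_candidate(JobProfile(5 * TIB, 0.7, "2.4.8")))   # False: pre-Spark 3
```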

It’s also important to note that GPU-friendly jobs benefit from much larger partitions (1GB–2GB), because GPUs work best when given chunky data to process. If you’re used to Spark’s default spark.sql.files.maxPartitionBytes of 128MB, this shift might feel strange, but it’s worth it.
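A quick calculation shows what larger partitions change. The sketch below approximates the number of input partitions as total size divided by spark.sql.files.maxPartitionBytes. It deliberately ignores Spark’s per-file open cost and its tendency to shrink splits to keep all cores busy, so treat the results as ballpark figures; the 1 TiB input is a hypothetical example.

```python
MIB = 1024 ** 2
GIB = 1024 ** 3
TIB = 1024 ** 4


def approx_input_partitions(total_bytes: int, max_partition_bytes: int) -> int:
    """Rough count of input splits: ceil(total / maxPartitionBytes).

    Simplification: real Spark also adds spark.sql.files.openCostInBytes per file
    and may shrink splits below maxPartitionBytes to keep all cores busy.
    """
    return (total_bytes + max_partition_bytes - 1) // max_partition_bytes


for label, size in [("128 MiB (default)", 128 * MIB), ("1 GiB", GIB), ("2 GiB", 2 * GIB)]:
    n = approx_input_partitions(1 * TIB, size)
    print(f"{label:>18}: {n:>5} partitions for 1 TiB")
```

For 1 TiB this gives 8192 partitions at the default, 1024 at 1 GiB, and 512 at 2 GiB. Fewer, larger partitions hand each GPU task enough rows to amortize the fixed cost of moving data to the device and launching work there.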

How PayPal Saved 70% 💡

At PayPal, the stakes were high. Their systems run millions of Spark jobs daily, processing petabytes of data. The costs? Astronomical. They needed a solution, fast. The journey began with a simple question: Could GPUs take the load off their CPU-based Spark clusters? Initial experiments were promising, so they made the leap, migrating their workloads to Spark 3 with NVIDIA’s RAPIDS Accelerator.

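The first step was enabling Adaptive Query Execution (AQE), Spark 3’s superpower for optimizing partitions and shuffle stages. AQE re-optimizes the query plan at runtime using statistics from completed shuffle stages, so it can coalesce small shuffle partitions, split skewed ones, and switch join strategies. That ability to resize partitions based on the actual workload was crucial for GPU performance.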
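For reference, these are the standard Spark 3 settings that switch AQE on and let it merge small shuffle partitions toward a target size. The minimal sketch below collects them in a dict; the 1g advisory size is an assumed value picked to line up with the 1GB–2GB partition guidance, not a setting PayPal has published.

```python
# Spark 3 AQE settings; the advisory partition size is an illustrative assumption.
AQE_CONF = {
    "spark.sql.adaptive.enabled": "true",                      # re-plan using runtime shuffle stats
    "spark.sql.adaptive.coalescePartitions.enabled": "true",   # merge small shuffle partitions
    "spark.sql.adaptive.advisoryPartitionSizeInBytes": "1g",   # target size when coalescing
    "spark.sql.adaptive.skewJoin.enabled": "true",             # split oversized partitions in joins
}

# With PySpark installed, the same settings can be applied at session creation:
#   from pyspark.sql import SparkSession
#   builder = SparkSession.builder.appName("aqe-example")
#   for key, value in AQE_CONF.items():
#       builder = builder.config(key, value)
#   spark = builder.getOrCreate()

for key, value in AQE_CONF.items():
    print(f"{key}={value}")
```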

Next came the hardware upgrade. They replaced 140 CPU machines with just 30 GPU machines, each equipped with Tesla T4 GPUs. With GPUs handling most of the heavy lifting, they cut down on cloud infrastructure without compromising performance.

But the real magic happened in the details. By tweaking parameters like spark.sql.files.maxPartitionBytes (to create larger partitions) and fine-tuning GPU concurrency settings, they unlocked even greater efficiencies.

The result? A 45% cost reduction from smarter partitioning and AQE tuning alone. Once GPUs were fully operational, total savings soared to 70%: a job that used to cost $1,000 now ran for just $300.
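The figures are easy to sanity-check. This sketch assumes both the 45% and the 70% are measured against the same $1,000 baseline, which is how the $300 end result reads; the intermediate $550 and the per-step percentage are derived from that assumption, not numbers PayPal reported. The last line applies the same formula to the 140-to-30 machine count.

```python
def cost_after_savings(baseline: float, savings_pct: float) -> float:
    """Cost remaining after a percentage reduction from the baseline."""
    return baseline * (1 - savings_pct / 100)


def savings_pct(before: float, after: float) -> float:
    """Percentage reduction going from `before` to `after`."""
    return (before - after) / before * 100


baseline = 1_000.0
tuned_cpu = cost_after_savings(baseline, 45)  # AQE + partition tuning only (assumed same baseline)
with_gpus = cost_after_savings(baseline, 70)  # full GPU migration

print(f"CPU tuning only: ${tuned_cpu:,.0f}")
print(f"With GPUs:       ${with_gpus:,.0f}")
# Saving of the GPU step measured against the already-tuned CPU job.
print(f"GPU step alone:  {savings_pct(tuned_cpu, with_gpus):.1f}%")
# Reduction in machine count from 140 CPU nodes to 30 GPU nodes.
print(f"Machines:        {savings_pct(140, 30):.1f}% fewer")
```

Under that reading, moving the already-tuned job onto GPUs cut its cost by a further 45% or so, while the machine count dropped by roughly 79%.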

Hardware Selection: NVIDIA Tesla T4 GPUs

A pivotal aspect of PayPal's strategy was the choice of NVIDIA Tesla T4 GPUs. The T4 balances performance and energy efficiency: it pairs 16 GB of GPU memory with a 70 W power envelope and supports multiple precisions (FP32, FP16, INT8). That combination of multi-precision support and low power draw made it well suited to large-scale data processing and contributed to the overall efficiency of PayPal's pipelines.

Performance and Cost Benefits

By integrating the RAPIDS Accelerator and Tesla T4 GPUs, PayPal experienced:

Accelerated Data Processing: Tasks that previously took hours were completed in minutes, enhancing overall productivity.

Reduced Operational Costs: The efficiency of GPU processing led to lower cloud resource consumption, resulting in substantial cost savings.

Scalability: The solution provided the flexibility to scale operations without a proportional increase in costs.

Challenges and Lessons Learned 🧗‍♂️

This transformation wasn’t without its hiccups. One major challenge was GPU memory management. A GPU has far less memory than the host’s system RAM (16 GB on a T4), so data that doesn’t fit can spill out of GPU memory and onto local SSDs. PayPal tackled this by adding extra SSDs to their machines and carefully tuning the spark.rapids.memory.pinnedPool.size parameter, which reserves pinned host memory for fast transfers between the GPU and the host.
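A configuration sketch of those two mitigations: a pinned host-memory pool, and spark.local.dir spread across several local SSDs so shuffle and spill files land on fast disks. The mount points and the 8g pool size are hypothetical placeholders, not PayPal’s values; size the pinned pool against each node’s RAM.

```python
# Hypothetical SSD mount points; replace with the local disks on your nodes.
SSD_DIRS = ["/mnt/ssd0/spark", "/mnt/ssd1/spark", "/mnt/ssd2/spark"]

MEMORY_CONF = {
    # Pinned (page-locked) host memory that speeds up copies between GPU and host.
    "spark.rapids.memory.pinnedPool.size": "8g",
    # Comma-separated scratch dirs for shuffle and spill files; listing several
    # SSDs spreads the I/O. On YARN this is overridden by the NodeManager's
    # local dirs, so configure the disks there instead.
    "spark.local.dir": ",".join(SSD_DIRS),
}

for key, value in MEMORY_CONF.items():
    print(f"--conf {key}={value}")
```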

Another hurdle was concurrency. Initially, they tried running too many tasks on each GPU, which caused bottlenecks. By limiting the number of concurrent GPU tasks to two, they struck a balance between performance and stability.
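The interplay between Spark’s scheduler and a cap on GPU tasks can be sketched as follows. Spark 3 decides how many tasks an executor runs from its cores and spark.task.resource.gpu.amount; the RAPIDS setting spark.rapids.sql.concurrentGpuTasks then limits how many of those may use the GPU at the same moment. The helper name and executor sizes below are hypothetical, and the model assumes one GPU per executor and spark.task.cpus=1.

```python
import math


def task_slots(executor_cores: int, task_gpu_amount: float,
               concurrent_gpu_tasks: int) -> tuple[int, int]:
    """Return (tasks Spark schedules per executor, tasks allowed on the GPU at once).

    Assumes one GPU per executor and spark.task.cpus=1. Spark schedules
    min(cores, 1 / task GPU amount) tasks; the RAPIDS plugin then lets only
    `concurrent_gpu_tasks` of them use the GPU simultaneously, while the rest
    wait or do CPU-side work such as reading input.
    """
    scheduled = min(executor_cores, math.floor(1 / task_gpu_amount))
    on_gpu = min(scheduled, concurrent_gpu_tasks)
    return scheduled, on_gpu


# Hypothetical executor: 8 cores, 8 tasks sharing 1 GPU, GPU concurrency capped at 2.
print(task_slots(executor_cores=8, task_gpu_amount=0.125, concurrent_gpu_tasks=2))  # (8, 2)
```

Keeping more scheduled tasks than GPU slots lets some tasks prepare data on the CPU while others occupy the GPU; raising the GPU cap instead puts more tasks in competition for the same device memory.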

What Can You Learn From This? 🛠️

So, how can you apply PayPal’s success to your own systems? Start by analyzing your Spark jobs. Are they computationally heavy? Do they process huge datasets? If so, they’re prime candidates for GPU acceleration.

Next, focus on partition size. GPUs work best with large partitions, so consider raising spark.sql.files.maxPartitionBytes and enabling AQE. Finally, monitor your GPU utilization with tools such as nvidia-smi and the Spark UI, and tune settings like spark.rapids.sql.concurrentGpuTasks for optimal performance.
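One low-tech way to watch utilization is to poll nvidia-smi in CSV mode, for example nvidia-smi --query-gpu=utilization.gpu,memory.used,memory.total --format=csv,noheader,nounits, and flag GPUs that sit mostly idle. The sketch below only parses that output. The sample text is made up, the 50% threshold is an arbitrary assumption, and the class and function names are hypothetical. For query-level checks, setting spark.rapids.sql.explain=NOT_ON_GPU makes the plugin log which operators fell back to the CPU.

```python
from dataclasses import dataclass


@dataclass
class GpuSample:
    index: int
    util_pct: int
    mem_used_mib: int
    mem_total_mib: int


def parse_nvidia_smi(csv_text: str) -> list[GpuSample]:
    """Parse `nvidia-smi --query-gpu=utilization.gpu,memory.used,memory.total
    --format=csv,noheader,nounits` output, one line per GPU."""
    samples = []
    rows = (ln for ln in csv_text.splitlines() if ln.strip())
    for i, line in enumerate(rows):
        util, used, total = (int(field.strip()) for field in line.split(","))
        samples.append(GpuSample(i, util, used, total))
    return samples


def underused(samples: list[GpuSample], min_util_pct: int = 50) -> list[int]:
    """Indices of GPUs below an (assumed) utilization threshold."""
    return [s.index for s in samples if s.util_pct < min_util_pct]


# Made-up sample output for two 16 GB GPUs.
SAMPLE = """\
87, 13210, 15360
12, 2048, 15360
"""
gpus = parse_nvidia_smi(SAMPLE)
print(underused(gpus))  # [1]
```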