How Snap Cut Compute Costs by 65% and Got Faster Doing It

The architecture overhaul that slashed costs and boosted engineering speed

🚀 TL;DR

Snap transitioned from a monolith running in Google App Engine to a distributed service mesh across AWS and GCP, powered by Kubernetes and Envoy. This move cut compute costs by 65%, boosted reliability, and reduced latency. But scaling infrastructure isn’t as easy as sprinkling Kubernetes on VMs. It took careful design, internal tooling, and a few hard lessons along the way.

📌 The Impact: What Changed

🔹 Compute cost down by 65%

🔹 Latency down across the board

🔹 Higher reliability for Snapchatters

🔹 Easier multi-cloud support and service ownership

Snap moved fast, but not recklessly. This wasn’t just a migration; it was a reinvention.

🔍 What Was the Problem?

Snap’s old architecture was a monolith running inside Google App Engine. That worked, until it didn’t.

❌ Engineers couldn’t own pieces of the system

❌ Shared datastores made decoupling a nightmare

❌ Cross-team dependencies slowed everyone down

❌ Moving workloads between cloud providers? Basically impossible

A monolith got Snap to scale. But it couldn’t take them any further.

🧠 Root Cause

The problem wasn’t performance; it was velocity. Engineers wanted to ship independently, own their services, and reduce the blast radius during outages.

To fix this, Snap pivoted to a service-oriented architecture: independent, composable microservices connected via secure, observable communication.

This is not to say that microservices are the only way to solve these problems, but for Snap they made sense.

But how do you build a universe of services without throwing developers into a pit of YAML and toil?

🔧 How Snap Pulled It Off

1️⃣ Build Infrastructure as Leverage

Snap didn’t want every team solving auth, metrics, and deployments over and over. So they abstracted it.

Design principles:

Secure by default

Abstract cloud differences

Centralized service discovery

Low-friction service creation

Clear separation of business logic vs. infrastructure

2️⃣ Adopt Proven Tools, Don’t Reinvent

Instead of building everything in-house, Snap leaned into open source:

✔️ Kubernetes for orchestration

✔️ Envoy for service-to-service communication

✔️ Docker for containerization

✔️ Spinnaker for deployments

These tools gave Snap the building blocks. What came next was stitching them together with opinionated internal systems.

🕸️ Hello, Service Mesh

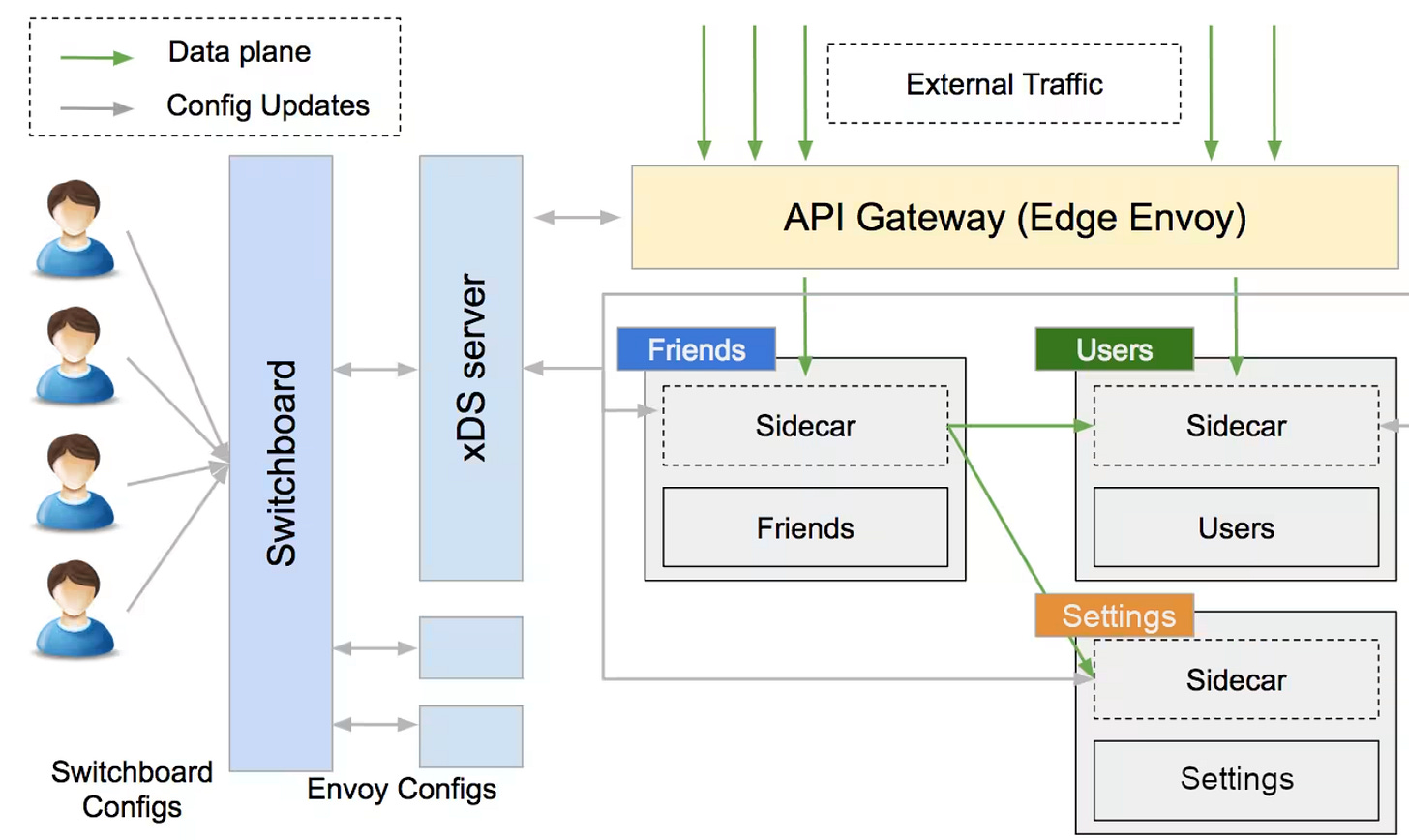

Snap’s architecture now uses an Envoy-based Service Mesh.

Every microservice runs with an Envoy sidecar. All network traffic—ingress and egress—flows through Envoy. That means:

🔐 TLS by default

📈 Rich telemetry

💥 Circuit-breaking and retries

📡 Central config management via xDS

This enables fine-grained traffic control, observability, and consistent security across all services and environments.
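To make "circuit-breaking and retries" concrete, here is a minimal Python sketch of the idea. It is not Envoy's implementation and not Snap's code: the `CircuitBreaker` class, its thresholds, and the simplified "close again after a cooldown" behavior are all assumptions chosen for illustration. In a real mesh the sidecar does this for you, so the application code never has to.

```python
import time


class CircuitOpenError(Exception):
    """Raised when the breaker rejects a call without trying the upstream."""


class CircuitBreaker:
    """Toy breaker: opens after N consecutive failures, closes after a cooldown."""

    def __init__(self, max_failures=3, reset_after_s=30.0, clock=time.monotonic):
        self.max_failures = max_failures
        self.reset_after_s = reset_after_s
        self.clock = clock          # injectable so the behavior is easy to test
        self.failures = 0           # consecutive failures seen so far
        self.opened_at = None       # None means the circuit is closed

    def call(self, fn, retries=2):
        # While open, fail fast until the cooldown has elapsed.
        if self.opened_at is not None:
            if self.clock() - self.opened_at < self.reset_after_s:
                raise CircuitOpenError("upstream marked unhealthy")
            # Cooldown over: close the circuit and try again (simplified half-open).
            self.opened_at = None
            self.failures = 0

        last_error = None
        for _ in range(retries + 1):
            try:
                result = fn()
                self.failures = 0   # any success resets the failure count
                return result
            except Exception as exc:
                last_error = exc
                self.failures += 1
                if self.failures >= self.max_failures:
                    self.opened_at = self.clock()  # trip the breaker
                    break
        raise last_error
```

A caller would wrap each upstream request, for example `breaker.call(lambda: fetch_profile(user_id))`, where `fetch_profile` is a hypothetical function. Once the upstream fails repeatedly, later calls are rejected immediately instead of piling more load onto a struggling service.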

📟 Meet Switchboard: Snap’s Control Plane

Switchboard is the internal web UI for managing all services, configs, and traffic policies. Think of it as the mission control for Snap's mesh.

Through Switchboard, service owners can:

Create services and clusters

Shift traffic between regions

Manage dependencies

Roll out and roll back safely

It abstracts Envoy’s full API, replacing it with a simple, service-centric config model. Changes go into DynamoDB, then get expanded into full Envoy configs and rolled out through Snap’s custom xDS control plane.
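To picture what "expanding" a service-centric config means, here is a rough Python sketch. Switchboard's real schema is not public, so `ServiceSpec`, `expand_to_clusters`, and the `mesh.internal` domain are hypothetical names. The output is only loosely modelled on the shape of an Envoy cluster definition and leaves out most of what a production config would carry.

```python
from dataclasses import dataclass, field


@dataclass
class ServiceSpec:
    """Hypothetical service-centric record, as a service owner might declare it."""
    name: str
    # Maps each upstream dependency name to the port it listens on.
    dependencies: dict = field(default_factory=dict)
    timeout_s: float = 1.0


def expand_to_clusters(spec, domain="mesh.internal"):
    """Expand one short spec into a verbose cluster entry per dependency."""
    clusters = []
    for dep, port in spec.dependencies.items():
        clusters.append({
            "name": f"{spec.name}->{dep}",
            "type": "STRICT_DNS",
            "connect_timeout": f"{spec.timeout_s}s",
            "lb_policy": "ROUND_ROBIN",
            "load_assignment": {
                "cluster_name": dep,
                "endpoints": [{"lb_endpoints": [{"endpoint": {"address": {
                    "socket_address": {
                        "address": f"{dep}.{domain}",  # assumed naming scheme
                        "port_value": port,
                    }
                }}}]}],
            },
        })
    return clusters
```

The point of the design is the ratio: the owner writes a few lines about their service, and the control plane produces the dozens of lines the proxy actually needs. That keeps proxy details out of every team's head and lets the platform change the expansion rules in one place.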

Deployments? Also simplified. Switchboard hooks into Spinnaker to auto-generate pipelines with canaries, health checks, and zonal rollouts.
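As a sketch of what an auto-generated pipeline could look like, the Python below builds an ordered list of stages from a service name and its zones. This is not Spinnaker's pipeline format and not Snap's generator: `build_pipeline`, the stage names, and the default 5% canary are assumptions made for illustration.

```python
def build_pipeline(service, zones, canary_percent=5):
    """Return ordered rollout stages: canary first, then one zone at a time."""
    stages = [
        # Send a small slice of traffic to the new version first.
        {"stage": "canary", "service": service, "traffic_percent": canary_percent},
        {"stage": "health_check", "service": service, "target": "canary"},
    ]
    for zone in zones:
        # Each zone is deployed and verified before the next one starts.
        stages.append({"stage": "deploy", "service": service, "zone": zone})
        stages.append({"stage": "health_check", "service": service, "target": zone})
    return stages
```

Calling `build_pipeline("friends", ["zone-a", "zone-b"])` yields six stages: a canary and its check, then a deploy and a check for each zone. Because the pipeline is generated, every service gets the same safety steps without anyone hand-writing them.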

🌐 Snap’s Private Network + API Gateway

Snap's services run inside a private, regional network. Only one system touches the public Internet: Snap’s API Gateway.

It’s Envoy, too—running custom filters for Snapchat’s mobile auth, rate limiting, and load shedding.

Once requests pass through the filter chain, they’re routed internally to the right service via the Mesh. This minimizes public exposure and tightens Snap’s security posture.
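The filter chain idea can be sketched in a few lines of Python. Snap's gateway filters are custom Envoy filters, so nothing here reflects their actual code: `Request`, `TokenBucket`, the three filter factories, and `handle` are hypothetical, and a single shared bucket stands in for what would more likely be per-client limits.

```python
import time
from dataclasses import dataclass


@dataclass
class Request:
    path: str
    token: str = ""


class TokenBucket:
    """Simple token bucket: refills at rate_per_s, holds at most `burst` tokens."""

    def __init__(self, rate_per_s, burst, clock=time.monotonic):
        self.rate, self.burst, self.clock = rate_per_s, burst, clock
        self.tokens = float(burst)
        self.updated = clock()

    def allow(self):
        now = self.clock()
        self.tokens = min(self.burst, self.tokens + (now - self.updated) * self.rate)
        self.updated = now
        if self.tokens >= 1:
            self.tokens -= 1
            return True
        return False


# Each filter returns None to pass the request on, or (status, reason) to reject it.
def auth_filter(valid_tokens):
    return lambda req: None if req.token in valid_tokens else (401, "unauthenticated")


def rate_limit_filter(bucket):
    return lambda req: None if bucket.allow() else (429, "rate limited")


def load_shed_filter(current_load, max_load):
    return lambda req: None if current_load() < max_load else (503, "shedding load")


def handle(req, filters, routes):
    """Run filters in order; only requests that pass all of them get routed."""
    for check in filters:
        rejection = check(req)
        if rejection is not None:
            return rejection
    upstream = routes.get(req.path)
    return (200, upstream) if upstream else (404, "no route")
```

Order matters in a chain like this. Putting authentication first means unauthenticated traffic is rejected before it consumes rate-limit budget, and nothing reaches an internal service unless every filter has let it through.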

🤔 Lessons Learned
