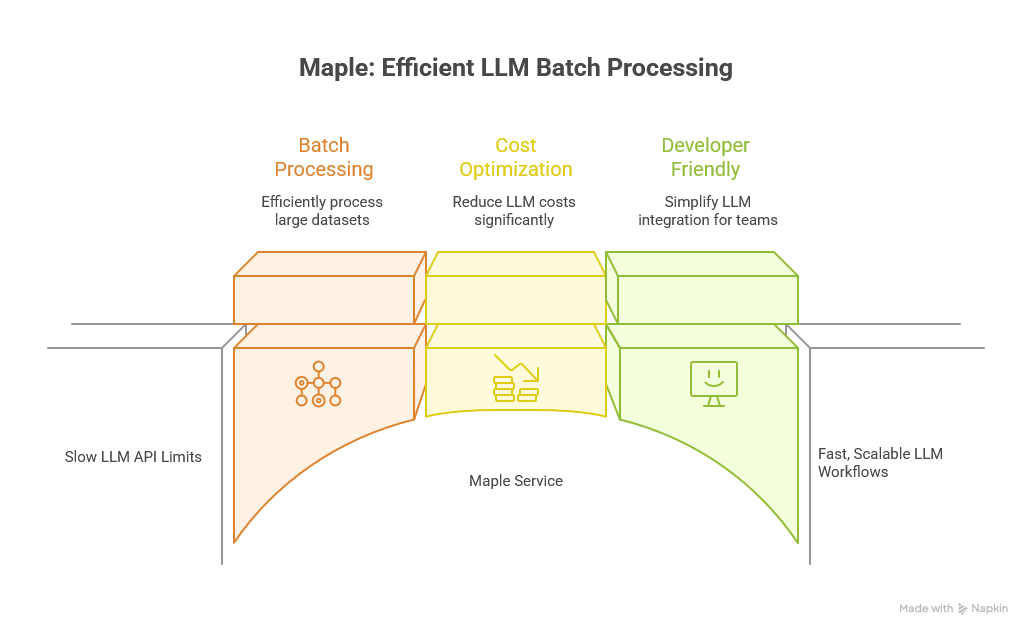

Scaling LLM Superpowers Across Instacart with Maple

How Instacart built a robust, cost-effective, and developer-friendly platform to power large-scale LLM processing across the company.

At Instacart, engineering teams are harnessing large language models (LLMs) to tackle a wide range of critical challenges, from cleaning up catalog data to enhancing search relevance. However, the sheer scale of these LLM-powered workflows, which often require millions of prompts, quickly exposed the limits of relying on real-time LLM provider APIs.

TL;DR

To address this, Instacart built Maple, a service that makes large-scale LLM batch processing fast, cost-effective (saving up to 50% on LLM costs), and developer-friendly. Maple abstracts away the complexities of batching, encoding, file management, and retries, so teams across the company can use LLMs at scale without reinventing the infrastructure.

Why this matters

As Instacart's use of LLMs expanded across critical domains like catalog enrichment, fulfillment, and search, the engineering teams faced growing pains. Real-time LLM APIs were frequently rate-limited, causing delays and making it difficult to keep up with demand. Additionally, multiple teams were independently building similar batch processing pipelines, leading to duplicated effort and fragmented solutions.

Maple: Powering Large-Scale LLM Workflows

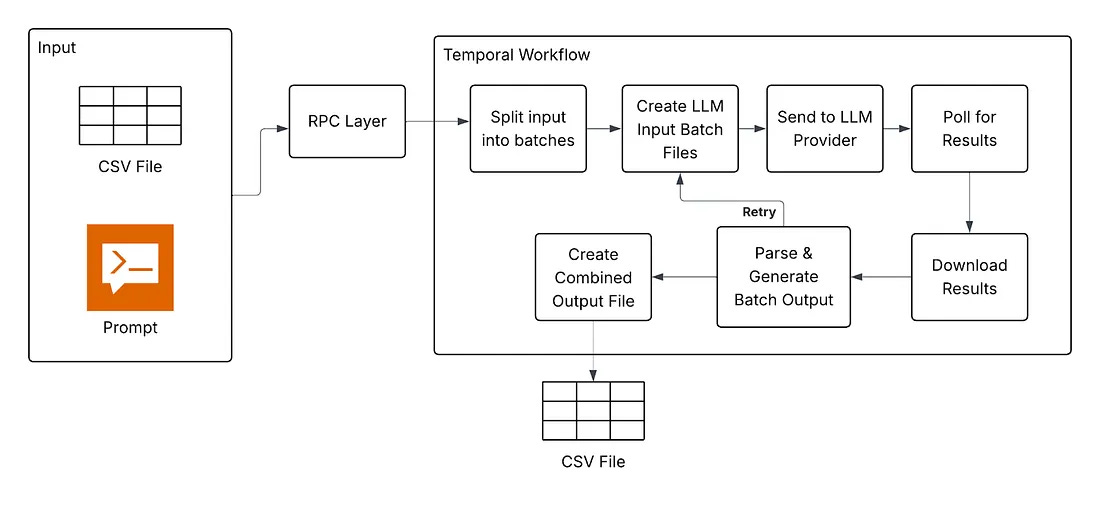

1. Streamlining the Batch Processing Workflow

Maple automates the entire batch processing workflow, handling tasks like:

Batching: Splitting large input files into smaller batches

Encoding/Decoding: Automating conversions to and from the LLM batch file format

File Management: Automating input uploads, job monitoring, and result downloads

Retries: Ensuring failed tasks are retried automatically for consistent outputs

Cost Tracking: Recording detailed LLM usage and cost for each team
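The post does not show Maple's code or name its LLM provider, so the following is only a minimal sketch of the batching and encoding/decoding steps. It assumes an OpenAI-style batch file format (one JSON request per line, each tagged with a `custom_id` so results can be joined back to input rows). The function names, the `row-<n>` ID scheme, the default model, and the batch-size limit are all hypothetical.

```python
import json
from typing import Iterator

# Hypothetical defaults for illustration; Maple's real limits are not described in the post.
MAX_REQUESTS_PER_BATCH = 50_000
DEFAULT_MODEL = "gpt-4o-mini"


def split_into_batches(
    prompts: list[str], batch_size: int = MAX_REQUESTS_PER_BATCH
) -> Iterator[list[tuple[int, str]]]:
    """Batching: yield chunks of (row_index, prompt) pairs, each at most batch_size long."""
    for start in range(0, len(prompts), batch_size):
        chunk = prompts[start:start + batch_size]
        yield [(start + offset, prompt) for offset, prompt in enumerate(chunk)]


def encode_batch(batch: list[tuple[int, str]], model: str = DEFAULT_MODEL) -> str:
    """Encoding: turn one batch into JSONL in an OpenAI-style batch request format (assumed)."""
    lines = []
    for row_index, prompt in batch:
        request = {
            "custom_id": f"row-{row_index}",  # hypothetical ID scheme used to join results back
            "method": "POST",
            "url": "/v1/chat/completions",
            "body": {"model": model, "messages": [{"role": "user", "content": prompt}]},
        }
        lines.append(json.dumps(request))
    return "\n".join(lines) + "\n"


def decode_results(jsonl_text: str) -> tuple[dict[int, str], list[int]]:
    """Decoding: parse a batch output file into {row_index: completion} plus failed row indices."""
    completions: dict[int, str] = {}
    failed: list[int] = []
    for line in jsonl_text.splitlines():
        if not line.strip():
            continue
        record = json.loads(line)
        row_index = int(record["custom_id"].removeprefix("row-"))
        response = record.get("response")
        # Treat an explicit error, a missing response, or a non-200 status as a failure to retry.
        if record.get("error") or not response or response.get("status_code") != 200:
            failed.append(row_index)
            continue
        completions[row_index] = response["body"]["choices"][0]["message"]["content"]
    return completions, failed
```

Carrying the original row index through `custom_id` is what lets decoded results (which a batch provider may return in any order) be written back against the right input rows, and it gives the retry step an exact list of rows to resubmit.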

By managing these steps, Maple eliminates the need for teams to write custom batch processing code, significantly enhancing productivity.
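Retries and cost tracking can be sketched in the same hedged way. Here `run_batch` is a hypothetical callable standing in for one upload, poll, and download cycle against the provider, and `BatchResult`, `CostLedger`, and `run_with_retries` are illustrative names. The per-token prices and the 50% batch discount are assumptions chosen to mirror the "up to 50%" savings mentioned above, not Instacart's actual rates.

```python
from collections import defaultdict
from dataclasses import dataclass, field
from typing import Callable

# Hypothetical prices in USD per 1M tokens; real rates depend on the provider and model.
REALTIME_PRICE_PER_M = {"input": 0.15, "output": 0.60}
BATCH_DISCOUNT = 0.5  # assumption: batch requests are billed at half the real-time rate


@dataclass
class BatchResult:
    completions: dict[int, str]  # row index -> model output for rows that succeeded
    input_tokens: int
    output_tokens: int


@dataclass
class CostLedger:
    by_team: dict[str, float] = field(default_factory=lambda: defaultdict(float))

    def record(self, team: str, input_tokens: int, output_tokens: int) -> float:
        """Add the discounted cost of one batch submission to the team's running total."""
        realtime_cost = (
            input_tokens * REALTIME_PRICE_PER_M["input"]
            + output_tokens * REALTIME_PRICE_PER_M["output"]
        ) / 1_000_000
        cost = realtime_cost * BATCH_DISCOUNT
        self.by_team[team] += cost
        return cost


def run_with_retries(
    team: str,
    prompts: list[str],
    run_batch: Callable[[dict[int, str]], BatchResult],
    ledger: CostLedger,
    max_attempts: int = 3,
) -> tuple[dict[int, str], list[int]]:
    """Submit prompts, resubmitting only rows without a completion, up to max_attempts times."""
    pending = dict(enumerate(prompts))
    completions: dict[int, str] = {}
    for _ in range(max_attempts):
        if not pending:
            break
        result = run_batch(pending)
        ledger.record(team, result.input_tokens, result.output_tokens)
        completions.update(result.completions)
        # Anything not completed (explicit failure or missing from the output) is retried.
        pending = {i: p for i, p in pending.items() if i not in completions}
    return completions, sorted(pending)
```

Retrying only the rows that still lack a completion keeps each retry batch small, and recording cost on every submission means retry spend is attributed to the same team as the original request.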

2. Architecting for Scalability and Fault Tolerance


Subscribe to Byte-Sized Design to keep reading this post and get 7 days of free access to the full post archives.