System Design for Everyone: A Primer on Caching Strategies

When, Why, and How to Use Them Effectively

🚀 TL;DR

Imagine your service is taking off, users are flocking in, and everything’s running smoothly until your database starts to choke. Queries slow down, page loads crawl, and users start leaving. This is where caching comes in. A well-placed cache can transform your system, reducing latency, offloading your backend, and keeping costs in check.

In this write-up, we’ll dive into the main caching strategies: when to use them, their advantages, and the trade-offs they come with. Let’s break down how each approach works so you can choose the right one for your needs.

So, What Are the Requirements? 🤔

Caching plays a vital role in systems where:

Performance is key: When users demand lightning-fast responses (think social media apps or e-commerce platforms), caching speeds up data delivery compared to hitting the database every time.

Reads outnumber writes: In read-heavy scenarios like product catalogs or user profiles, caching can significantly reduce the load on your database.

Costs need managing: Serving data from a cache is usually far cheaper than running repetitive database queries or expensive computations.

But caching isn’t a one-size-fits-all solution. For rapidly changing data or write-heavy workloads, the risks of stale data and inconsistency might outweigh the benefits. Always weigh the trade-offs before implementing a caching strategy.
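To put rough numbers on that trade-off, here is a small back-of-the-envelope sketch. The function name and the latencies (1 ms for the cache, 20 ms for the database) are made-up assumptions for illustration, not measurements. It assumes a miss pays for the cache lookup plus the database query.

```python
def expected_read_latency_ms(hit_ratio, cache_ms, database_ms):
    """Average read latency when a miss costs a cache lookup plus a database query."""
    hit_cost = cache_ms
    miss_cost = cache_ms + database_ms
    return hit_ratio * hit_cost + (1 - hit_ratio) * miss_cost


# Hypothetical latencies: 1 ms cache lookup, 20 ms database query.
for hit_ratio in (0.0, 0.5, 0.9, 0.99):
    latency = expected_read_latency_ms(hit_ratio, cache_ms=1.0, database_ms=20.0)
    print(f"{hit_ratio:.0%} hits -> {latency:.1f} ms average read")
```

With these assumed numbers, a 90% hit ratio brings the average read from 21 ms down to about 3 ms, while a 50% hit ratio only gets it to about 11 ms. Rapidly changing data tends to push the hit ratio down, which is why the benefit shrinks for those workloads.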

There are also different strategies for reading from and writing to a cache, each tailored to specific use cases. Understanding these approaches will help you choose the one that fits your system’s needs. Let’s explore them!

📋 Cache Write Strategies

💾 Write-Through Cache

The write-through caching strategy ensures that data stays consistent between your cache and database. Here's how it works: when data is written or updated, the cache handles it first and then immediately writes the same data to the database. Think of the cache as a middle layer sitting between your application and the database, making sure both stay synchronized.

How Write-Through Works

Let’s break it down step by step:

Write request: The application sends a request to update or add data.

Cache update: The cache processes this request and stores the new data.

Database update: The cache then writes the same data to the database.

Acknowledgment: Only after the database write is successful does the system acknowledge the write request back to the application.

At the end of this process, both the cache and the database hold the exact same value. Because the write is synchronous, any key present in the cache reflects what the database holds, provided every write goes through the cache.
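The steps above can be sketched in a few lines of Python. This is a minimal illustration, not a production design: `WriteThroughCache` is a hypothetical class, a plain dict stands in for the database, and the rollback on a failed database write is an added assumption that the steps above don't spell out.

```python
_MISSING = object()  # sentinel meaning "key was not cached before this write"


class WriteThroughCache:
    """Toy write-through cache: each write updates the cache, then the database."""

    def __init__(self, database):
        self.database = database  # stand-in for a real database (a dict here)
        self.entries = {}

    def write(self, key, value):
        previous = self.entries.get(key, _MISSING)
        self.entries[key] = value          # cache update
        try:
            self.database[key] = value     # synchronous database update
        except Exception:
            # Assumption: undo the cache update so the cache never holds
            # a value that the database rejected.
            if previous is _MISSING:
                self.entries.pop(key, None)
            else:
                self.entries[key] = previous
            raise
        return True                        # acknowledge only after both succeed

    def read(self, key):
        return self.entries.get(key)


database = {}
cache = WriteThroughCache(database)
acknowledged = cache.write("user:1", {"name": "Ada"})
print(acknowledged, cache.read("user:1") == database["user:1"])
```

The caller only gets its acknowledgment once both stores agree, which is where the extra write latency comes from.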

Why Use Write-Through Caching?

Write-through caching might sound like an extra step, and it is: every write involves two operations, one to the cache and another to the database, which adds some latency. But the payoff is big. You can skip most of the hassle of managing cache invalidation, because when every database write flows through the cache, cached entries don’t go stale.

Common Use Cases

Systems prioritizing data consistency: Write-through caching is ideal for applications where having up-to-date data in the cache is critical, such as financial systems or e-commerce inventory tracking.

Read-heavy workloads that also need consistent writes: Pairing write-through caching with read-through caching ensures both fast reads and consistent writes.

Pros:

Data consistency: The cache is always in sync with the database.

Simplified logic: No need for complex cache invalidation strategies.

Cons:

Write latency: Every write operation takes longer since it involves both the cache and the database.

No write offloading: The cache handles reads efficiently, but every write still hits the database, so write load on the database is not reduced.

In summary, write-through caching strikes a balance between consistency and simplicity. While it’s not the fastest option for write-heavy workloads, its guarantee of data integrity makes it a reliable choice for many applications. Pair it with a read-through cache to get the best of both worlds: consistent writes and blazing-fast reads.

Write-Around Cache

The write-around caching strategy takes a different approach to handling data writes compared to write-through caching. In this method, data is written directly to the database, skipping the cache entirely. The cache only gets involved when data is requested during a read operation.

This strategy works best when the cache is primarily used to speed up frequent reads, rather than to manage data consistency during writes. By keeping the cache focused on popular data, it avoids unnecessary writes to the cache for data that may not be read often.

How Write-Around Works

Here’s what happens step by step:

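Write request: The application writes new or updated data directly to the database. The cache is bypassed during this step; if an older copy of the key is already cached, it must be invalidated or allowed to expire, or reads will keep returning the stale value.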

Read request (cache miss): If the application requests the data that was just written, and the cache doesn’t have it, this results in a cache miss.

Fetch from the database: The cache retrieves the data from the database and stores it for future requests.

Read request (cache hit): For subsequent requests, the data is retrieved directly from the cache, making reads much faster.

By skipping writes to the cache, write-around avoids overloading the cache with rarely used data, keeping it lean and focused on frequently accessed items.
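Here is a minimal sketch of that flow, assuming a hypothetical `WriteAroundStore` class with dicts standing in for the cache and the database. Dropping any older cached copy on write is an added assumption, included so that a previously cached key doesn't serve stale data.

```python
class WriteAroundStore:
    """Toy write-around setup: writes skip the cache, reads fill it on a miss."""

    def __init__(self, database):
        self.database = database   # stand-in for a real database (a dict here)
        self.cache = {}
        self.database_reads = 0    # counter so the example can show hits vs. misses

    def write(self, key, value):
        self.database[key] = value   # the write goes straight to the database
        self.cache.pop(key, None)    # assumption: drop any older cached copy

    def read(self, key):
        if key in self.cache:        # cache hit
            return self.cache[key]
        self.database_reads += 1     # cache miss: fetch from the database
        value = self.database[key]   # raises KeyError if the key was never written
        self.cache[key] = value      # store it for future requests
        return value


store = WriteAroundStore({})
store.write("log:42", "disk full")
cached_after_write = "log:42" in store.cache   # the write did not touch the cache
first = store.read("log:42")                   # miss, goes to the database
second = store.read("log:42")                  # hit, served from the cache
print(cached_after_write, store.database_reads)
```

Only the first read after a write pays the database round trip; data that is written but never read never takes up cache space.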

Why Use Write-Around Caching?

Write-around caching is ideal when your application has a read-heavy workload but only occasionally needs to access recently written data. It avoids wasting cache space on data that isn’t frequently accessed while still providing the benefits of quick reads for commonly used information.

Common Use Cases

Applications with infrequent reads for new data: In systems where newly written data is not immediately read (e.g., logging systems or archival storage), write-around is a good fit.

Optimized cache usage: For applications with limited cache capacity, this strategy ensures that only frequently read data occupies cache memory.

Pros:

Reduced cache load: The cache only stores frequently accessed data, avoiding unnecessary writes.

Efficient use of memory: Cache space is reserved for popular data, maximizing its impact on read performance.

Cons:

Potential cache misses: Recently written data may not be available in the cache if it’s requested shortly after being written.

Read latency: Cache misses for recently written data require fetching from the database, which can be slower.

In summary, write-around caching shines when your goal is to optimize the cache for reads, especially in scenarios where new data isn’t read immediately. While it introduces a risk of cache misses for freshly written data, it’s a practical choice for read-heavy workloads where cache efficiency matters more than fast access to the newest writes.

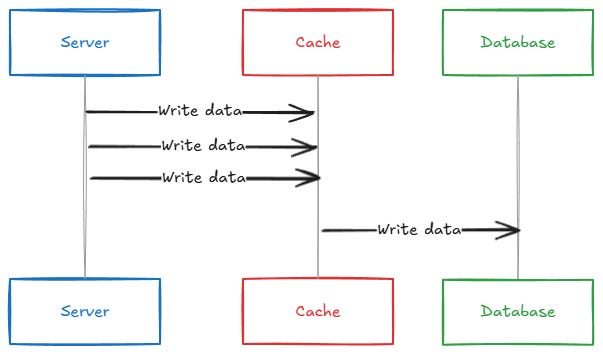

Write-Back Cache

The write-back caching strategy prioritizes speed and efficiency for write operations. In this approach, data is written to the cache first and marked as "dirty," meaning it hasn’t yet been written to the database. The database is updated later, either in batches or asynchronously, ensuring high write performance at the potential cost of immediate consistency.

This strategy is especially useful for write-heavy workloads where minimizing latency is a priority. However, it introduces complexity since there’s a risk of data loss if the cache fails before it syncs with the database.

How Write-Back Works

Here’s a step-by-step breakdown:

Write request: The application writes data to the cache. The database is not updated immediately.

Dirty data marker: The cache flags the new or updated data as "dirty," indicating that it still needs to be written to the database.

Acknowledgment: The application receives confirmation as soon as the data is written to the cache, without waiting for the database update.

Deferred database update: At a later time, the cache writes the dirty data to the database, either when triggered by a threshold (e.g., number of writes) or during scheduled synchronization.

By deferring database writes, this strategy minimizes the latency experienced by the application during writes.
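As a rough sketch of the idea, the hypothetical `WriteBackCache` below tracks dirty keys in a set and flushes them in one batch. The threshold here counts dirty keys rather than individual writes, and a dict stands in for the database; both are simplifying assumptions. A real implementation would also need to handle flush failures and cache crashes.

```python
class WriteBackCache:
    """Toy write-back cache: writes land in the cache and reach the database later."""

    def __init__(self, database, flush_threshold=3):
        self.database = database              # stand-in for a real database (a dict)
        self.flush_threshold = flush_threshold
        self.entries = {}
        self.dirty = set()                    # keys not yet written to the database

    def write(self, key, value):
        self.entries[key] = value             # write to the cache only
        self.dirty.add(key)                   # mark the entry as dirty
        if len(self.dirty) >= self.flush_threshold:
            self.flush()                      # threshold reached: sync in one batch
        return True                           # acknowledged without waiting on the database

    def flush(self):
        batch = {key: self.entries[key] for key in self.dirty}
        self.database.update(batch)           # one batched database write
        self.dirty.clear()
        return len(batch)


database = {}
cache = WriteBackCache(database, flush_threshold=3)
cache.write("sensor:1", 20.5)
cache.write("sensor:2", 21.0)
pending = set(cache.dirty)              # two dirty keys so far
database_before_flush = dict(database)  # still empty at this point
cache.write("sensor:3", 19.8)           # third dirty key triggers the flush
print(database_before_flush, database)
```

Anything still in `dirty` when the cache process dies is gone, which is exactly the data loss risk this strategy trades for speed.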

Why Use Write-Back Caching?

Write-back caching is ideal for systems where write performance is critical, and occasional delays in database consistency are acceptable. It’s often used in scenarios where large volumes of data need to be written quickly, and immediate synchronization with the database isn’t necessary.

Common Use Cases

Write-heavy applications: Systems like analytics platforms or IoT devices generating massive write workloads benefit from the reduced latency.

Temporary data: Use cases where data may not need to persist in the database (e.g., session data) can benefit from write-back caching.

Pros:

Low write latency: Applications experience minimal delay because the cache handles writes first.

Efficient batching: Deferring writes allows the system to batch updates to the database, reducing overall load.

Cons:

Data loss risk: If the cache fails before syncing with the database, dirty data may be lost, and until the sync happens the database lags behind the cache.

Complexity: Implementing a reliable write-back mechanism requires careful handling of cache failures and synchronization logic.

Write-back caching is a high-performance option for applications with heavy write workloads. It offers low latency and efficient database interaction by deferring updates, but this comes with the trade-off of potential data loss and consistency challenges. For systems where speed matters most, write-back caching is a powerful tool—just be prepared to handle the risks that come with delayed writes.

💡 Cache Read Strategies

Cache-aside

The cache-aside strategy is one of the most common patterns used in caching. In this approach, the application is responsible for interacting with both the cache and the database. The cache acts as a "sidekick," storing frequently used data and reducing database load, but it doesn't automatically populate itself.

With cache-aside, the application decides when to fetch data from the cache, when to populate the cache, and when to update or invalidate it. This flexibility makes it powerful but also requires thoughtful implementation to avoid stale or missing data.

How Cache-Aside Works

Here’s a step-by-step breakdown of how the pattern handles a read. (On writes, the application typically updates the database and then invalidates or updates the cached entry itself.)

Read:

Cache lookup: The application first checks if the requested data is in the cache.

Cache hit: If the data is in the cache, it is returned immediately. This is the fastest path.

Cache miss: If the data is not in the cache, the application fetches it from the database.

Cache population: The application stores the fetched data in the cache for future requests.
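In code, the read path above might look like the sketch below. The function names are hypothetical and dicts stand in for the cache and the database. The write helper shows one common choice (update the database, then invalidate the cached entry), which is an assumption rather than the only way to do it.

```python
def get_with_cache_aside(key, cache, database):
    """Read path: the application checks the cache, then falls back to the database."""
    if key in cache:                  # cache hit
        return cache[key]
    value = database.get(key)         # cache miss: the application queries the database
    if value is not None:
        cache[key] = value            # the application populates the cache
    return value


def update_with_cache_aside(key, value, cache, database):
    """Write path (one common choice): update the database, then invalidate the cache."""
    database[key] = value
    cache.pop(key, None)              # the next read reloads the fresh value


database = {"product:7": "Keyboard"}
cache = {}
first = get_with_cache_aside("product:7", cache, database)    # miss, then populate
second = get_with_cache_aside("product:7", cache, database)   # hit
update_with_cache_aside("product:7", "Mechanical keyboard", cache, database)
third = get_with_cache_aside("product:7", cache, database)    # miss again, fresh value
print(first, second, third)
```

Notice that all of the caching logic lives in application code; the cache itself is just a key-value store.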

Why Use Cache-Aside Caching?

Cache-aside is ideal for applications with unpredictable or uneven access patterns. It ensures the cache only stores frequently accessed data, keeping the cache lean and avoiding unnecessary memory usage.

Common Use Cases

Read-heavy applications: Systems like recommendation engines or product detail pages, where the same data is repeatedly accessed, benefit from cache-aside.

Dynamic workloads: In scenarios where access patterns change frequently, cache-aside ensures that only relevant data stays in the cache.

Pros:

Flexibility: The application controls the cache, allowing for fine-tuned behavior.

Efficient memory use: Only frequently accessed data occupies cache space.

Explicit invalidation: Developers decide exactly when to remove or update data in the cache.

Cons:

Cold starts: A cache miss requires fetching data from the database, which is slower.

Increased complexity: The application must handle cache management, including populating, invalidating, and updating it.

Cache-aside strikes a balance between performance and flexibility. By giving the application control over the cache, it can adapt to changing workloads and ensure efficient memory usage. However, it requires careful design to manage cache misses and invalidation effectively. It’s a great choice for most systems where reads are more frequent than writes, and maintaining control over caching behavior is critical.

Read-Through Cache

The read-through caching strategy simplifies data retrieval by putting the cache in charge of fetching data from the database. When an application requests data, it interacts only with the cache. If the cache doesn’t have the data (a cache miss), it automatically fetches it from the database, stores it, and then returns it to the application.

This strategy abstracts the complexity of cache management from the application, making it a popular choice for reducing latency and simplifying development.

How Read-Through Works

Let’s break it down step by step:

Read Operation:

Cache lookup: The application sends a request to the cache to retrieve data.

Cache hit: If the data exists in the cache, it is returned immediately, ensuring fast response times.

Cache miss: If the data is not in the cache, the cache fetches it from the database.

Cache population: After fetching the data, the cache stores it for future requests.

Return data: The cache returns the data to the application, completing the process.

Write Operation (Optional):

Although not a focus of read-through caching, some implementations include mechanisms to invalidate or update cached data during a write operation. This helps maintain consistency between the cache and the database.
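A minimal sketch of the read path, plus an optional invalidation hook, could look like this. `ReadThroughCache`, `load_article`, and the sample data are hypothetical names for illustration; the key point is that the cache owns the loader, so the application only ever calls `get`.

```python
class ReadThroughCache:
    """Toy read-through cache: the cache itself loads missing data from the database."""

    def __init__(self, loader):
        self.loader = loader     # callable the cache uses to query the database
        self.entries = {}

    def get(self, key):
        if key not in self.entries:                # cache miss
            self.entries[key] = self.loader(key)   # the cache fetches and stores the value
        return self.entries[key]                   # hit, or freshly loaded value

    def invalidate(self, key):
        """Optional hook a write path can call to avoid serving stale data."""
        self.entries.pop(key, None)


database = {"article:1": "Caching 101"}
loader_calls = []


def load_article(key):
    loader_calls.append(key)     # record each database access for the example
    return database[key]


cache = ReadThroughCache(load_article)
first = cache.get("article:1")    # miss: the cache calls load_article
second = cache.get("article:1")   # hit: no database access
print(first, second, len(loader_calls))
```

If the database row changes and nothing calls `invalidate`, the cache keeps returning the old value, which is the consistency issue to design around.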

Why Use Read-Through Caching?

Read-through caching is ideal for applications with predictable access patterns and high read-to-write ratios. It simplifies the caching process by making the cache responsible for fetching and storing data.

Common Use Cases

Read-heavy workloads: Applications like content delivery systems or analytics dashboards benefit from reduced database load and faster response times.

Transparent caching: When developers want the caching logic to stay behind the scenes, read-through is a natural fit.

Pros:

Simplicity: The application doesn’t need to manage cache population—it only interacts with the cache.

Improved performance: Frequent reads are served quickly from the cache, reducing latency.

Database load reduction: The cache absorbs most of the read traffic, lightening the load on the database.

Cons:

Cold starts: Cache misses still require a database query, which can slow down the first request for uncached data.

Potential consistency issues: If the cache isn’t updated during writes, stale data may be served.

Limited flexibility: The cache automatically decides what to store, giving the application less control over cache behavior.

Read-through caching takes the responsibility of fetching and managing data off the application, providing a clean and transparent caching mechanism. It’s particularly effective for read-heavy workloads where performance and ease of implementation are key priorities. However, managing cache consistency and avoiding cold start penalties require thoughtful design. With its blend of simplicity and efficiency, read-through caching is a great choice for many systems.

📜 Too Long, Did Read

Caching is more than a performance optimization; it’s a balancing act. By understanding the types of caches, choosing the right strategies, and anticipating the challenges, you can design systems that are both fast and reliable.

Next time your app feels sluggish or your database starts to struggle, pause and ask: is caching the missing puzzle piece, and if so, which strategy fits the way my data is read and written?
