The ChatGPT Outage: What OpenAI’s Post-Mortem Revealed 🛠️

How OpenAI addressed a system-wide outage that disrupted services for millions of users.

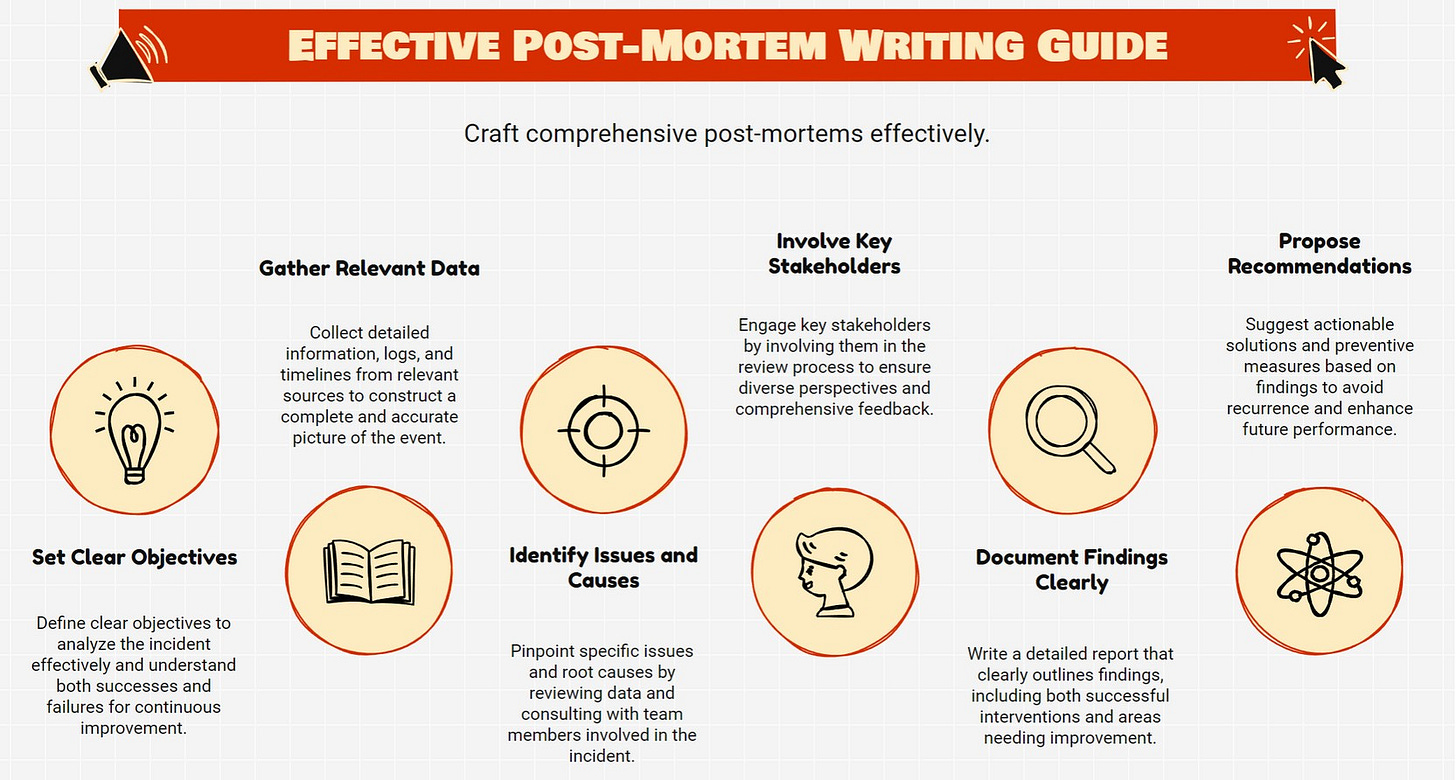

A few weeks ago, we explored the art of writing a great post-mortem: it’s not just about documenting what went wrong, but about uncovering the deeper lessons and actionable insights for the future. As if on cue, a significant event unfolded: OpenAI experienced a massive ChatGPT outage that disrupted millions of users. True to form, they published a detailed post-mortem, providing a masterclass in transparency and resilience. Let’s unpack what happened, what they learned, and how they’re turning this setback into a stronger foundation for the future.

🚀 TL;DR

On December 11, 2024, OpenAI's services, including ChatGPT, experienced significant downtime. The root cause was a new telemetry service deployment that unintentionally overwhelmed the Kubernetes control plane, leading to cascading failures across critical systems. OpenAI's post-mortem provides insights into the incident and outlines steps to prevent future occurrences.

Here's what we will go through:

1️⃣ 🚀 What Happened

2️⃣ 🛑 Root Causes

3️⃣ 🤔 Lessons Learned

4️⃣ 🛠️ Action Items for Future Prevention

💡 More Insights, Quiz & Official Link (Paid)

🚀 What Happened

On December 11, 2024, between 3:16 PM and 7:38 PM PST, OpenAI's services, including ChatGPT, the API, and Sora, experienced significant degradation or complete unavailability. The incident began with the deployment of a new telemetry service aimed at improving observability across OpenAI's Kubernetes clusters. However, this deployment inadvertently caused every node in each cluster to execute resource-intensive Kubernetes API operations, overwhelming the Kubernetes control plane and leading to service disruptions.

🛑 Root Causes

1️⃣ Overwhelmed Kubernetes Control Plane

The new telemetry service's configuration caused simultaneous, resource-intensive Kubernetes API operations across thousands of nodes. This unexpected load overwhelmed the Kubernetes API servers, taking down the control plane in most large clusters.

Why it Mattered: Service discovery in these clusters relied on DNS, and DNS resolution in turn depended on the Kubernetes control plane. Once the control plane went down, services could no longer locate each other, and the disruption spread widely.

2️⃣ Insufficient Testing in Large Clusters

The change was tested in a staging cluster where no issues were observed. However, the impact was specific to clusters exceeding a certain size, and the testing environment did not replicate these conditions.

Why it Mattered: Without testing in environments that mirror production scale, certain failure modes remained undetected until deployment.
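To see why a small staging cluster can hide this kind of failure, here is a rough back-of-the-envelope model. It assumes each node issues the same number of expensive API requests, so total load grows linearly with node count. Every number below (cluster sizes, requests per node, API server capacity) is invented for illustration and does not come from OpenAI's post-mortem.

```python
# Hypothetical model: every node sends the same number of expensive
# API requests per second, so total load scales with cluster size.

def control_plane_load(nodes: int, requests_per_node: float) -> float:
    """Total requests per second arriving at the API servers."""
    return nodes * requests_per_node


def is_overloaded(nodes: int, requests_per_node: float, capacity_rps: float) -> bool:
    """True when aggregate load exceeds what the API servers can absorb."""
    return control_plane_load(nodes, requests_per_node) > capacity_rps


# All values below are illustrative assumptions, not measured figures.
CAPACITY_RPS = 5_000.0
REQUESTS_PER_NODE = 2.0

staging_overloaded = is_overloaded(50, REQUESTS_PER_NODE, CAPACITY_RPS)
production_overloaded = is_overloaded(4_000, REQUESTS_PER_NODE, CAPACITY_RPS)

print(staging_overloaded, production_overloaded)  # False True
```

The same per-node behavior is harmless at 50 nodes and fatal at 4,000, which is why a test that passes in a small cluster says little about a large one.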

3️⃣ Delayed Visibility Due to DNS Caching

DNS caching on each node delayed the visibility of failures, allowing the rollout to continue before the full scope of the problem was understood. Once the DNS caches expired, services began failing due to their reliance on real-time DNS resolution.

Why it Mattered: The delayed failure visibility hindered immediate detection and mitigation efforts.
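The masking effect of caching can be shown with a toy TTL cache. This is a minimal sketch, not a model of any real DNS resolver: the `TTLCache` class, the `api.internal` name, the address, and the 30-second TTL are all hypothetical, and time is passed in explicitly so the example is deterministic.

```python
from typing import Callable, Dict, Tuple


class TTLCache:
    """Minimal DNS-style cache: entries are served until their TTL expires."""

    def __init__(self, resolver: Callable[[str], str], ttl_seconds: float) -> None:
        self._resolver = resolver
        self._ttl = ttl_seconds
        self._entries: Dict[str, Tuple[str, float]] = {}  # name -> (address, expires_at)

    def lookup(self, name: str, now: float) -> str:
        cached = self._entries.get(name)
        if cached is not None and now < cached[1]:
            return cached[0]  # cache hit: the upstream is never consulted
        address = self._resolver(name)  # raises if the upstream is down
        self._entries[name] = (address, now + self._ttl)
        return address


# Hypothetical upstream whose health we can toggle.
state = {"healthy": True}


def resolver(name: str) -> str:
    if not state["healthy"]:
        raise RuntimeError("DNS upstream unavailable")
    return "10.0.0.7"


cache = TTLCache(resolver, ttl_seconds=30.0)
cache.lookup("api.internal", now=0.0)  # warms the cache while healthy

state["healthy"] = False  # the upstream fails at t=0

# At t=10 the failure is invisible: the cached entry is still valid.
still_ok = cache.lookup("api.internal", now=10.0)

# At t=31 the entry has expired and the failure finally surfaces.
try:
    cache.lookup("api.internal", now=31.0)
    failed_after_expiry = False
except RuntimeError:
    failed_after_expiry = True

print(still_ok, failed_after_expiry)  # 10.0.0.7 True
```

For the length of the TTL, everything looks healthy even though the upstream is already gone, which is the window in which a rollout can keep going.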

🤔 Lessons Learned

OpenAI's post-mortem shed light on several critical lessons that will guide future improvements:

1️⃣ Comprehensive Load Testing: Testing in a staging cluster failed to replicate the size of the production clusters, and the failure only appeared above a certain cluster size. OpenAI recognized the need for testing environments that mirror the size and complexity of their production clusters.

2️⃣ Enhanced Observability: A lack of real-time monitoring during the rollout obscured the problem until it was too late. Improved observability tools are needed to detect anomalies in real time and provide immediate feedback during deployments.

3️⃣ Dependency Management: The tight coupling between the Kubernetes control plane and data plane created a critical vulnerability. Decoupling these components is essential to enhance resilience.

4️⃣ DNS Reliance: The reliance on Kubernetes DNS for service discovery introduced delays and cascading failures. This dependency must be re-evaluated to minimize risks.

5️⃣ Graceful Degradation: Systems must be designed to fail gracefully, ensuring that localized issues do not escalate into widespread outages.

🛠️ Action Items for Future Prevention

To prevent similar incidents, OpenAI outlined a set of targeted measures:

1️⃣ Robust Phased Rollouts

All infrastructure-related configuration changes will now follow a phased rollout process. This includes continuous monitoring to ensure both service workloads and Kubernetes clusters remain healthy. Failures will be detected early to limit impact.
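One way to picture a phased rollout is a loop that applies the change to one group of clusters at a time and checks health before moving on. The sketch below is an assumption-laden illustration, not OpenAI's tooling: the function name, the cluster names, and the health rule (the change only breaks clusters named `prod-large-*`) are all made up.

```python
from typing import Callable, List, Sequence


def phased_rollout(
    phases: Sequence[Sequence[str]],
    apply_change: Callable[[str], None],
    is_healthy: Callable[[str], bool],
    rollback: Callable[[str], None],
) -> List[str]:
    """Apply a change phase by phase; roll everything back on the first failed check.

    Returns the clusters that keep the change (empty if the rollout was aborted).
    """
    applied: List[str] = []
    for phase in phases:
        for cluster in phase:
            apply_change(cluster)
            applied.append(cluster)
        # A real rollout would wait here (a "soak" period) before checking health.
        if not all(is_healthy(cluster) for cluster in applied):
            for cluster in reversed(applied):
                rollback(cluster)
            return []
    return applied


# Hypothetical demo state.
deployed = set()
touched = []


def apply_change(cluster: str) -> None:
    deployed.add(cluster)
    touched.append(cluster)


def rollback(cluster: str) -> None:
    deployed.discard(cluster)


def is_healthy(cluster: str) -> bool:
    # Assumed failure mode: the change only breaks large clusters.
    return not (cluster in deployed and cluster.startswith("prod-large"))


phases = [["staging"], ["prod-small"], ["prod-large-1"], ["prod-large-2", "prod-large-3"]]
result = phased_rollout(phases, apply_change, is_healthy, rollback)

print(result, sorted(deployed), touched)
```

In this run the change passes staging and the small production cluster, fails on the first large cluster, and is rolled back before the remaining large clusters are ever touched.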

2️⃣ Fault Injection Testing

OpenAI will implement fault injection testing to stress-test their systems. This includes:

Simulating bad changes to ensure detection and rollback mechanisms work as expected.

Running tests to validate the resilience of the Kubernetes data plane when disconnected from the control plane.
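The first kind of test, simulating a bad change, can be sketched as follows. The `Service` class, the `broken` flag, and the error-rate threshold are hypothetical stand-ins; the point is only that the test deliberately ships a known-bad change and asserts that the guard catches it and restores the previous state.

```python
from typing import Any, Dict


class Service:
    """Toy service whose error rate depends on its current config."""

    def __init__(self, config: Dict[str, Any]) -> None:
        self.config = config

    def error_rate(self) -> float:
        # Assumed behavior: a config with "broken" set makes most requests fail.
        return 0.9 if self.config.get("broken") else 0.01


def deploy_with_guard(
    service: Service, new_config: Dict[str, Any], max_error_rate: float = 0.05
) -> bool:
    """Apply new_config and revert it if the error rate exceeds the threshold.

    Returns True if the new config was kept, False if it was rolled back.
    """
    previous = service.config
    service.config = new_config
    if service.error_rate() > max_error_rate:
        service.config = previous  # automatic rollback
        return False
    return True


def bad_change_is_detected_and_rolled_back() -> bool:
    """Fault injection: ship a deliberately bad config and check the guard."""
    service = Service({"version": 1})
    kept = deploy_with_guard(service, {"version": 2, "broken": True})
    return (not kept) and service.config == {"version": 1}


def good_change_is_kept() -> bool:
    """Control case: a healthy config should pass the guard untouched."""
    service = Service({"version": 1})
    kept = deploy_with_guard(service, {"version": 2})
    return kept and service.config == {"version": 2}


print(bad_change_is_detected_and_rolled_back(), good_change_is_kept())  # True True
```

Running the control case alongside the injected fault guards against a detector that simply rejects every change.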

3️⃣ Emergency Kubernetes Control Plane Access

A “break-glass” mechanism will be introduced to guarantee engineers can access the Kubernetes API server in an emergency, even when the control plane is under heavy load.

4️⃣ Decoupling the Kubernetes Data Plane and Control Plane

The dependency between the data plane and control plane will be addressed by:

Investing in systems that let mission-critical workloads in the data plane keep running independently of the control plane.

Reducing load-bearing responsibilities for the control plane to improve overall stability.
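A simplified picture of this decoupling is a data plane that routes from its last good snapshot instead of asking the control plane on every request. The classes, service name, and address below are hypothetical, and this is only one possible pattern, not a description of OpenAI's design.

```python
from typing import Dict


class ControlPlaneUnavailable(Exception):
    """Raised when the (toy) control plane cannot answer."""


class ControlPlane:
    def __init__(self, endpoints: Dict[str, str]) -> None:
        self.endpoints = dict(endpoints)
        self.available = True

    def get_endpoints(self) -> Dict[str, str]:
        if not self.available:
            raise ControlPlaneUnavailable("control plane is down")
        return dict(self.endpoints)


class DataPlaneRouter:
    """Routes from the last snapshot synced from the control plane."""

    def __init__(self, control_plane: ControlPlane) -> None:
        self._control_plane = control_plane
        self._snapshot: Dict[str, str] = {}

    def sync(self) -> bool:
        """Refresh the snapshot; on failure, keep the last good one."""
        try:
            self._snapshot = self._control_plane.get_endpoints()
            return True
        except ControlPlaneUnavailable:
            return False

    def route(self, service: str) -> str:
        # Routing never touches the control plane directly.
        return self._snapshot[service]


control_plane = ControlPlane({"chat-backend": "10.0.0.7"})
router = DataPlaneRouter(control_plane)
router.sync()  # initial sync while the control plane is healthy

control_plane.available = False  # simulate the outage
synced_during_outage = router.sync()
address_during_outage = router.route("chat-backend")

print(synced_during_outage, address_during_outage)  # False 10.0.0.7
```

The trade-off is staleness: while the control plane is down, the router keeps serving endpoints that may no longer be current, so a real system would also need a policy for how long a snapshot can be trusted.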

5️⃣ Faster Recovery Mechanisms

OpenAI plans to enhance recovery capabilities with:

Improved caching to reduce dependency on real-time resource availability.

Dynamic rate limiters to manage resource demand during cluster restarts.

Regular disaster recovery exercises to ensure rapid and correct cluster replacement.
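As an illustration of the rate-limiting idea, here is a minimal token bucket whose refill rate can be changed at runtime, so demand can be throttled hard during a restart and then relaxed as the cluster warms up. The post-mortem does not describe OpenAI's limiter; the class, the rates, and the burst size here are assumptions, and time is passed in explicitly to keep the example deterministic.

```python
class TokenBucket:
    """Token bucket with an adjustable refill rate."""

    def __init__(self, rate_per_sec: float, burst: float, now: float = 0.0) -> None:
        self.rate = rate_per_sec
        self.burst = burst
        self._tokens = 0.0  # start empty, as after a cold restart
        self._last = now

    def _refill(self, now: float) -> None:
        elapsed = max(0.0, now - self._last)
        self._tokens = min(self.burst, self._tokens + elapsed * self.rate)
        self._last = now

    def set_rate(self, rate_per_sec: float, now: float) -> None:
        """Change the refill rate, settling tokens at the old rate first."""
        self._refill(now)
        self.rate = rate_per_sec

    def allow(self, now: float) -> bool:
        """Admit one request if a whole token is available."""
        self._refill(now)
        if self._tokens >= 1.0:
            self._tokens -= 1.0
            return True
        return False


# Illustrative numbers only.
bucket = TokenBucket(rate_per_sec=1.0, burst=5.0, now=0.0)

# Cold cluster at t=1: five requests arrive, only one token has accrued.
admitted_cold = sum(bucket.allow(now=1.0) for _ in range(5))

# The cluster warms up, so the limit is raised to 4 requests per second.
bucket.set_rate(4.0, now=1.0)

# At t=2: five requests arrive, four tokens have accrued at the new rate.
admitted_warm = sum(bucket.allow(now=2.0) for _ in range(5))

print(admitted_cold, admitted_warm)  # 1 4
```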

🔔 Too Long; Did Read

On December 11, 2024, a new telemetry service deployment inadvertently overwhelmed the Kubernetes control plane, triggering a cascade of failures that took down ChatGPT and related services. OpenAI’s post-mortem revealed gaps in testing, dependency management, and emergency access protocols. To prevent future outages, they are implementing phased rollouts, decoupling critical systems, enhancing fault tolerance, and improving recovery mechanisms. These measures aim to strengthen system resilience and ensure reliable service for all users.

💡 More Insights, Quiz & Official Link (Paid)
