The Trillion-Event Platform: How Spotify Built a Data System That Doesn't Break

TL;DR

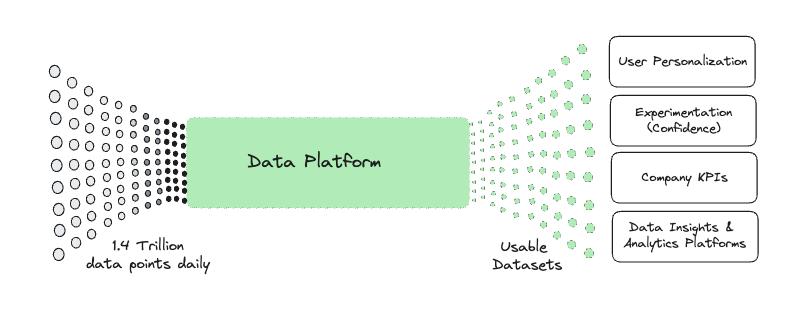

Spotify processes 1.4 trillion data points daily. It grew from running Europe’s largest Hadoop cluster to a team of 100+ engineers operating a full GCP-based platform. The turning point came when the team stopped treating the data platform as infrastructure and started treating it as a product with real customers.

🎯 The Problem Space

Most companies hit the “we need a data platform” moment when their Slack is flooded with:

“Where’s that dataset again?”

“Why did this pipeline fail overnight?”

“Can someone explain why our numbers don’t match?”

Spotify hit all of these triggers, and it faced an additional constraint: when your product is personalization, data isn’t a nice-to-have. It’s the entire business.

At scale, this meant:

1 trillion+ events per day flowing through event delivery

38,000+ scheduled pipelines running hourly and daily

1,800+ event types representing user interactions

Teams across payments, ML, experimentation, and product all needing reliable, fast access to that data
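To put the daily volumes in perspective, here is a quick back-of-the-envelope conversion to per-second rates. It is only a sketch: it assumes traffic is spread evenly across the day, which real traffic is not, so actual peaks will be higher than these averages. The function name is illustrative and not part of any Spotify tooling.

```python
SECONDS_PER_DAY = 24 * 60 * 60  # 86,400 seconds


def average_per_second(events_per_day: int) -> float:
    """Average rate, assuming events are spread evenly over 24 hours."""
    return events_per_day / SECONDS_PER_DAY


if __name__ == "__main__":
    # Daily figures quoted in the article.
    daily_volumes = {
        "event delivery (1 trillion+ events)": 1_000_000_000_000,
        "total data points (1.4 trillion)": 1_400_000_000_000,
    }
    for label, per_day in daily_volumes.items():
        print(f"{label}: ~{average_per_second(per_day):,.0f} per second")
```

Even as a flat average, that works out to more than 11 million events every second through event delivery alone, which is why an overnight pipeline failure or a mismatched number is a business problem rather than an inconvenience.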