GitHub’s Hard-Learned Scaling Lessons

Real-World Scaling Lessons: Caching, Query Tuning, and Smart Rollouts

🚀 TL;DR

Scaling a system like GitHub is a delicate balancing act. Even small changes can cause ripple effects across databases, caching layers, and API endpoints. GitHub's engineering team constantly monitors performance, tests optimizations, and fine-tunes configurations to stay ahead of scaling challenges.

Key improvements:

✅ Performance of slow queries in the Command Palette improved by 80-90%, reducing timeout rates drastically.

✅ Login failures dropped by 90%, and Redis remained stable under peak loads.

✅ Feature flag lookup overhead reduced by 50%, making evaluations nearly instant.

Here’s what we’ll cover:

1️⃣ The Tools GitHub Uses (Observability, query profiling, and experiment-driven improvements)

2️⃣ Performance Bottlenecks Fixed! (Slow queries, caching failures, excessive feature flag checks, and redundant code execution)

3️⃣ Lessons for Lead Engineers

4️⃣ How You Can Apply These in Your Own Systems

🔍 Background: How GitHub Monitors and Debugs Performance

GitHub handles millions of user requests daily, with a backend primarily powered by MySQL, Redis, and Rails. Keeping this system performant requires:

✅ Datadog for real-time metrics – Tracks request latency, query execution times, and cache hit/miss ratios.

✅ Splunk for event logging – Stores detailed logs for debugging slow endpoints and unexpected failures.

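✅ Scientist for production experiments – Runs the old (control) and new (candidate) code paths side by side, always returns the old result, and records mismatches and timing differences, for example when comparing an optimized query against the original.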

✅ Flipper for gradual rollouts – Allows incremental feature releases to detect performance regressions before full deployment.

GitHub’s scaling strategy revolves around continuous monitoring, iterative improvements, and smart rollouts to ensure smooth performance at scale.
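A gradual rollout depends on each user landing consistently on one side of the flag, so that raising the percentage only adds users. The sketch below shows that idea in plain Ruby. It is not Flipper's code: the `PercentageRollout` class, the `enabled_for?` method, and the CRC32 bucketing are assumptions chosen for illustration.

```ruby
require "zlib"

# Hypothetical percentage-of-actors gate (illustration only, not Flipper's API).
class PercentageRollout
  def initialize(feature, percentage)
    @feature = feature.to_s
    @percentage = percentage # 0..100
  end

  # Hashing "feature:actor" gives each actor a stable bucket in 0..99 for this
  # feature, so the same actor always gets the same answer, and raising the
  # percentage only ever adds actors to the enabled group.
  def enabled_for?(actor_id)
    bucket = Zlib.crc32("#{@feature}:#{actor_id}") % 100
    bucket < @percentage
  end
end

rollout = PercentageRollout.new(:fast_redirect, 10)
enabled = (1..10_000).count { |id| rollout.enabled_for?(id) }
puts "#{enabled} of 10000 actors enabled"
```

Because buckets are derived from the feature name as well as the actor, different features roll out to different slices of users rather than always hitting the same early adopters.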

🛠️ Performance Bottlenecks GitHub Fixed

🚨 Slow Queries in the Command Palette

The Problem

GitHub’s Command Palette feature, which allows users to quickly search and navigate repositories, experienced increasing query timeouts. The culprit? A poorly optimized query structure:

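```ruby
# Load every repository ID the org owns (thousands for large orgs)...
org_repo_ids = Repository.where(owner: org).pluck(:id)
# ...then pass that entire list into the IN (...) clause of the next query.
suggested_repo_ids = Contribution.where(user: viewer, repository_id: org_repo_ids).pluck(:repository_id)
```

When an org had thousands of repositories, the IN (...) clause in the second query ballooned, because its size grew with the org rather than with the viewer, leading to MySQL timeouts.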

The Fix

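GitHub engineers flipped the query order, building the IN (...) list from the viewer's own contributions instead. That list is typically far shorter than an org's full repository list, so the query no longer grows with the size of the org: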

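```ruby
# Start from the viewer's contributions, typically a short list...
contributor_repo_ids = Contribution.where(user: viewer).pluck(:repository_id)
# ...then keep only the repositories that belong to the org.
suggested_repo_ids = Repository.where(owner: org, id: contributor_repo_ids).pluck(:id)
```

✅ Result: Query performance improved by 80-90%, drastically reducing timeout rates.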
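To see why the order matters, the two queries can be modeled in plain Ruby with in-memory arrays standing in for the `repositories` and `contributions` tables. The `Repo` and `Contribution` structs, the helper names, and the data are all hypothetical; the point is that both orders return the same repositories while the IN list shrinks from the org's repository count to the viewer's contribution count.

```ruby
require "set"

# Hypothetical in-memory stand-ins for the two tables.
Repo = Struct.new(:id, :owner)
Contribution = Struct.new(:user, :repository_id)

# Original order: every repo ID in the org becomes the IN (...) list.
def suggested_original(repos, contributions, org, viewer)
  in_list = repos.select { |r| r.owner == org }.map(&:id).to_set
  ids = contributions
          .select { |c| c.user == viewer && in_list.include?(c.repository_id) }
          .map(&:repository_id)
  [ids.uniq.sort, in_list.size]
end

# Flipped order: only the viewer's distinct contributed repo IDs form the IN (...) list.
def suggested_flipped(repos, contributions, org, viewer)
  in_list = contributions.select { |c| c.user == viewer }.map(&:repository_id).to_set
  ids = repos.select { |r| r.owner == org && in_list.include?(r.id) }.map(&:id)
  [ids.sort, in_list.size]
end

repos = (1..5_000).map { |i| Repo.new(i, "acme") } +
        (5_001..6_000).map { |i| Repo.new(i, "other-org") }
contributions = [3, 17, 17, 4_999, 5_500].map { |id| Contribution.new("octocat", id) } +
                [1, 2].map { |id| Contribution.new("someone-else", id) }

orig_ids, orig_in = suggested_original(repos, contributions, "acme", "octocat")
flip_ids, flip_in = suggested_flipped(repos, contributions, "acme", "octocat")
puts "original: #{orig_ids.inspect}, IN list size #{orig_in}" # original: [3, 17, 4999], IN list size 5000
puts "flipped:  #{flip_ids.inspect}, IN list size #{flip_in}" # flipped:  [3, 17, 4999], IN list size 4
```

The flipped order only helps while the viewer's contribution list stays small; a user who has contributed to thousands of repositories would rebuild a large IN list, so that assumption is worth checking against real data.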

They validated the change with a Scientist experiment, which confirmed that the new query returned the same results as the old one while running faster.
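The control/candidate pattern behind such an experiment can be sketched in a few lines. This is a hypothetical stand-in, not the Scientist gem's API: the `MiniExperiment` class and its `run(control:, candidate:)` method are invented for illustration, and unlike Scientist it does not randomize execution order or sample which requests run the candidate.

```ruby
# Hypothetical minimal experiment runner (not the scientist gem's API).
class MiniExperiment
  Result = Struct.new(:name, :matched, :control_seconds, :candidate_seconds, :candidate_error)

  attr_reader :results

  def initialize(name)
    @name = name
    @results = []
  end

  # Runs both code paths, records whether they agree and how long each took,
  # and always returns the control's value so users never see the candidate.
  def run(control:, candidate:)
    control_value, control_time = timed(&control)
    candidate_error = nil
    begin
      candidate_value, candidate_time = timed(&candidate)
    rescue StandardError => e
      # A failing candidate must not break the request; record it instead.
      candidate_error = e
    end
    matched = candidate_error.nil? && candidate_value == control_value
    @results << Result.new(@name, matched, control_time, candidate_time, candidate_error)
    control_value
  end

  private

  def timed
    start = Process.clock_gettime(Process::CLOCK_MONOTONIC)
    value = yield
    [value, Process.clock_gettime(Process::CLOCK_MONOTONIC) - start]
  end
end

experiment = MiniExperiment.new("command-palette-suggestions")
experiment.run(
  control:   -> { [3, 17, 4_999] }, # stub for the original query order
  candidate: -> { [3, 17, 4_999] }  # stub for the flipped query order
)
puts experiment.results.last.matched # prints "true"
```

Returning the control's value is what makes this safe to run in production: a wrong or crashing candidate shows up in the recorded results, not in user responses.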

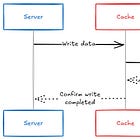

🚨 Caching Failures Took Down Authentication

The Problem

GitHub’s authentication system relied on Redis for caching user session tokens. Under extreme load, Redis started failing to evict old keys, leading to cache saturation and blocked login attempts.

The Fix

1️⃣ Implemented a second-level cache with MySQL as a fallback.

2️⃣ Introduced request coalescing, so concurrent requests for the same key share a single lookup instead of each one hitting Redis.

3️⃣ Adjusted TTL values dynamically to prevent cache bloat.

✅ Result: Login failures dropped by 90%, and Redis remained stable under peak loads.
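The three fixes can be combined in a single read path. The sketch below is plain Ruby under stated assumptions: a `Hash` with expiry timestamps stands in for Redis, the block passed to `fetch` stands in for the MySQL fallback query, and a per-call `ttl:` stands in for whatever TTL tuning policy was actually used. The `CoalescingCache` class is hypothetical, not GitHub's code.

```ruby
# Hypothetical sketch: a Hash with expiry times stands in for Redis, and the
# block passed to #fetch stands in for the MySQL fallback query.
class CoalescingCache
  def initialize(clock: -> { Process.clock_gettime(Process::CLOCK_MONOTONIC) })
    @store = {}                                   # key => [value, expires_at]
    @locks = Hash.new { |h, k| h[k] = Mutex.new } # one lock per key (never pruned here)
    @guard = Mutex.new                            # protects @store and @locks
    @clock = clock
  end

  # Returns the cached value, or runs the block once per key even when many
  # threads miss at the same time (request coalescing). nil is never cached.
  def fetch(key, ttl:)
    cached = read(key)
    return cached unless cached.nil?

    key_lock = @guard.synchronize { @locks[key] }
    key_lock.synchronize do
      # Another thread may have filled the key while this one waited.
      cached = read(key)
      return cached unless cached.nil?

      value = yield
      @guard.synchronize { @store[key] = [value, @clock.call + ttl] } unless value.nil?
      value
    end
  end

  private

  # Expired entries are dropped on read, so stale keys don't pile up.
  def read(key)
    @guard.synchronize do
      value, expires_at = @store[key]
      return nil if expires_at.nil?

      if expires_at <= @clock.call
        @store.delete(key)
        return nil
      end
      value
    end
  end
end

cache = CoalescingCache.new
threads = 5.times.map do
  Thread.new { cache.fetch("session:42", ttl: 300) { sleep 0.05; "token-abc" } }
end
threads.map(&:value) # every thread gets "token-abc"; the block ran only once
```

The second `read` inside the per-key lock is what turns a burst of simultaneous misses into one fallback query: the first thread loads and stores the value, and every thread queued behind it finds the key already filled.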


🚨 Feature Flag Overhead Slowed Down Requests

The Problem

GitHub uses Flipper for feature flags, but looking up each flag individually on every request added unexpected overhead. Some endpoints were making hundreds of flag lookups, increasing response times by 15-20%.

The Fix

The engineers:

1️⃣ Batched feature flag checks instead of querying each flag individually.

2️⃣ Cached flag results for the duration of each request instead of re-fetching them multiple times.

✅ Result: Reduced overhead by 50%, making feature flag evaluations nearly instant.
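Both fixes can be sketched with a small per-request wrapper. The `FlagStore` backend, its `get_all` batch method, and the `RequestFlags` class are hypothetical stand-ins; Flipper has its own memoization support, and this sketch neither uses nor reproduces that API.

```ruby
# Hypothetical flag backend; counts round trips so the savings are visible.
class FlagStore
  attr_reader :round_trips

  def initialize(flags)
    @flags = flags # feature name => enabled?
    @round_trips = 0
  end

  # One round trip fetches many flags at once (the batched path).
  def get_all(names)
    @round_trips += 1
    names.to_h { |n| [n, @flags.fetch(n, false)] }
  end
end

# Lives for exactly one request; discard it when the request ends so
# flag changes are picked up by the next request.
class RequestFlags
  def initialize(store, preload: [])
    @store = store
    @memo = preload.empty? ? {} : store.get_all(preload)
  end

  def enabled?(name)
    @memo.fetch(name) do
      # Flag not preloaded: fetch it once and remember it for this request.
      @memo.merge!(@store.get_all([name]))
      @memo[name]
    end
  end
end

store = FlagStore.new({ fast_redirect: true, new_palette: false })
flags = RequestFlags.new(store, preload: [:fast_redirect, :new_palette])
200.times { flags.enabled?(:fast_redirect); flags.enabled?(:new_palette) }
puts store.round_trips # prints 1
```

Scoping the memo to a single request is the design trade-off here: repeated checks become hash lookups, while a flag flipped mid-request only takes effect on the next request.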

🚨 Removing Unused Code for Faster Requests

The Problem

GitHub’s tooling surfaces problem areas, but getting ahead of performance issues takes proactive analysis. One team reviewed its busiest request endpoints and found that a simple redirect was consistently one of the slowest actions, frequently hitting timeout thresholds.

The Fix

1️⃣ Analyzed logs in Splunk to identify the top 10 controller/action pairs with the highest latency.

2️⃣ Created a Datadog dashboard to track request volume, average/P99 latency, and max request latency.

3️⃣ Found that an unnecessary access check was slowing down the redirect response.

4️⃣ Used Flipper to gate a new, optimized code path that skipped the unnecessary access check for these requests.

5️⃣ Monitored performance improvements in real time before rolling out the change to all users.
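Steps 1 and 2 boil down to grouping request logs by controller/action and ranking them by tail latency. A minimal sketch, assuming each log line has already been parsed into a hash with hypothetical `controller`, `action`, and `duration_ms` fields (not Splunk's or Datadog's actual format); it uses nearest-rank percentiles, which may not match how those tools compute theirs.

```ruby
# Nearest-rank percentile over an already-sorted array of numbers.
def percentile(sorted, p)
  return nil if sorted.empty?

  rank = (p * sorted.size / 100.0).ceil # 1-based rank
  sorted[[rank, 1].max - 1]
end

# Groups parsed log entries by "Controller#action" and ranks them by P99 latency.
def slowest_endpoints(entries, top: 10)
  entries
    .group_by { |e| "#{e[:controller]}##{e[:action]}" }
    .map do |endpoint, rows|
      durations = rows.map { |r| r[:duration_ms] }.sort
      {
        endpoint: endpoint,
        count: durations.size,
        avg_ms: durations.sum / durations.size.to_f,
        p75_ms: percentile(durations, 75),
        p99_ms: percentile(durations, 99),
        max_ms: durations.last
      }
    end
    .sort_by { |stats| -stats[:p99_ms] }
    .first(top)
end
```

Ranking by P99 rather than the average keeps rare but timeout-prone requests, like the slow redirect, near the top of the list even when most calls to the same action are fast.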

✅ Result: Reduced max request latency, improved P75/P99 response times, and prevented timeouts. The same approach was applied to other controllers for wider performance gains.