📚 Give us your thoughts!

Before we jump into today’s topic, we would love to know what kind of posts you look forward to most from our publication. Cast your vote! (And if it’s something else, feel free to leave a comment!)

We always appreciate the feedback. Now, let’s jump into rate limiting!

📚 TL;DR

Rate limiting is the bouncer of your application, ensuring only a select few (or many) get to party at a time. It helps prevent resource starvation, saves costs, and stops your servers from throwing up their virtual hands in defeat. Let’s dive into why you should be best friends with rate limiting and how to implement it like a pro!

📈 Welcome to the Rate Limiting Club

Picture this: you’ve got a popular new API, and users are hammering at your doors like it’s a Black Friday sale. It’s exciting, right? Until you realize your servers are about to melt under the weight of those requests. Time to look into rate limiting—the unsung hero of modern applications, ensuring that not just anyone can waltz in and make your system collapse like a house of cards.

🚦 What Is Rate Limiting, Anyway?

Rate limiting controls how often a user or service can access a resource within a given time window. Think of it as the polite traffic cop who waves you through the intersection: “One at a time, folks!” When users exceed the set threshold, their requests are either throttled (delayed or queued) or blocked (rejected outright, typically with an HTTP 429 Too Many Requests response). Here are a few examples to illustrate:

Three logins per second per IP address: We know you’re excited, but let’s not have a stampede of logins crashing the server like a herd of over-caffeinated squirrels!

Ten profile updates per day: Seriously, do you really need to change your bio more than ten times in a single day? Let’s save some updates for tomorrow. Your followers will survive!

Twenty password attempts per hour per device: Remember, it’s called “forgot your password,” not “let’s see how many times I can guess it.” Give those fingers a break!

One data export per week: We get it, you love your data! But let’s not turn our server into a data buffet—one export a week is plenty for even the most data-hungry users!
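To make the examples above concrete, here is a minimal sketch of a fixed-window counter in Python, one of the simplest ways to enforce rules like these. The `Rule` and `FixedWindowLimiter` names, the action labels, and the in-memory dictionary are illustrative assumptions, not any particular library’s API. Each (action, key) pair gets its own counter that resets at the start of every window, where the key is the IP address, user ID, or device ID the rule is scoped to.

```python
import time
from dataclasses import dataclass


@dataclass(frozen=True)
class Rule:
    limit: int     # maximum requests allowed per window
    window: float  # window length in seconds


# Hypothetical rules mirroring the examples above.
RULES = {
    "login": Rule(limit=3, window=1),                    # keyed by IP address
    "profile_update": Rule(limit=10, window=24 * 3600),  # keyed by user ID
    "password_attempt": Rule(limit=20, window=3600),     # keyed by device ID
    "data_export": Rule(limit=1, window=7 * 24 * 3600),  # keyed by user ID
}


class FixedWindowLimiter:
    """Counts requests per (action, key) in fixed, back-to-back time windows."""

    def __init__(self, rules, clock=time.monotonic):
        self.rules = rules
        self.clock = clock  # injectable so tests can control time
        self.counters = {}  # (action, key) -> (window index, count)

    def allow(self, action, key):
        """Return True if the request fits under the limit, False to throttle it."""
        rule = self.rules[action]
        window = int(self.clock() // rule.window)  # index of the current window
        counter_key = (action, key)
        last_window, count = self.counters.get(counter_key, (window, 0))
        if last_window != window:
            count = 0  # a new window has started, so the old count is discarded
        if count >= rule.limit:
            return False
        self.counters[counter_key] = (window, count + 1)
        return True
```

With a controllable clock, four logins from the same IP within one second return `True, True, True, False`, and the next second starts a fresh count. A known trade-off of fixed windows is that a client can squeeze up to twice the limit into a short burst straddling a window boundary; sliding-window and token-bucket variants smooth this out. Running several servers would also mean moving the counters out of process memory into a shared store.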

☀️ The Bright Side of Rate Limiting

You might be wondering, “Why should I care about limiting my users?” Well, here’s why you should roll out the red carpet for rate limiters:

Lower Those Costs: Unchecked traffic burns through compute and bandwidth, which shows up as unexpected bills. For those using third-party APIs that charge per call or enforce paid quotas, limiting calls is a financial lifesaver. It’s like setting a budget for your online shopping: nobody wants to overspend.

Keep Your Servers Happy: A rate limiter ensures your servers don’t implode from too many requests. Think of it as a protective barrier that rejects excess requests before they even reach the main event.

Enhanced Security: Rate limiting helps fend off malicious bots and brute-force attacks by capping how many requests or guesses any single client can make, keeping data and users safer.

📍 Where to Use Rate Limiting

Rate limiting can be your best friend in various scenarios, helping you manage resources without breaking a sweat:

APIs: Control incoming requests to prevent API abuse, ensuring fair access and maintaining performance for all users. Throttle login and authentication attempts to guard against brute-force attacks.

Data-Intensive Operations: Limit high-cost operations, like database queries or file uploads, to keep resources in check and avoid system strain.

Third-Party Integrations: Regulate requests to external services, protecting against penalties for overuse and ensuring compliance with API quotas.

Microservices: Manage inter-service communication to prevent any one service from overwhelming another, keeping the system balanced.
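For third-party integrations and service-to-service calls, the limiting often happens on the calling side. Below is a hedged sketch of a client-side token bucket in Python, a common algorithm (not necessarily one this newsletter will cover) that allows short bursts of up to `capacity` calls while holding the long-run rate to `rate` calls per second. `TokenBucket`, `call_with_budget`, and `send_request` are hypothetical names; you would set `rate` and `capacity` from your provider’s published quota.

```python
import time


class TokenBucket:
    """Holds up to `capacity` tokens, refilled continuously at `rate` tokens/second."""

    def __init__(self, rate, capacity, clock=time.monotonic):
        self.rate = rate
        self.capacity = capacity
        self.clock = clock        # injectable so tests can control time
        self.tokens = capacity    # start full, so an initial burst is allowed
        self.last = clock()

    def _refill(self):
        """Add tokens for the time elapsed since the last refill, up to capacity."""
        now = self.clock()
        self.tokens = min(self.capacity, self.tokens + (now - self.last) * self.rate)
        self.last = now

    def try_acquire(self, tokens=1):
        """Take tokens if available; return False instead of waiting."""
        self._refill()
        if self.tokens >= tokens:
            self.tokens -= tokens
            return True
        return False

    def wait_time(self, tokens=1):
        """Seconds until `tokens` would be available (0.0 if available now)."""
        self._refill()
        return max(0.0, (tokens - self.tokens) / self.rate)


def call_with_budget(bucket, send_request, sleep=time.sleep):
    """Wait until the bucket has a token, then make the outbound call."""
    while not bucket.try_acquire():
        sleep(bucket.wait_time())
    return send_request()
```

For instance, `TokenBucket(rate=2, capacity=2)` lets two calls through immediately and makes a third caller wait about half a second. The injectable `clock` and `sleep` parameters exist only so the behavior can be tested without real delays. This sketch is not thread-safe; a multi-threaded client would need a lock around the bucket.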

🔒 How Can You Implement Rate Limiting?

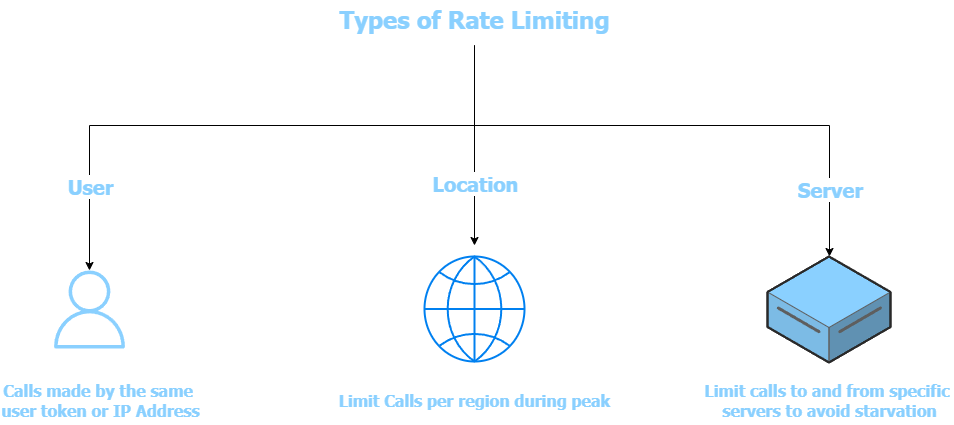

Rate limiting can be implemented at the client level, application level, infrastructure level, or anywhere in between. Here are a few ways to control inbound traffic to your API service:

By user: Track calls made by a user through an API key, access token, or IP address.

By geography: Adjust rate limits based on geographic regions, such as decreasing limits during peak hours for specific locations.

By server: If you have multiple servers managing different API calls, consider implementing stricter rate limits for access to more resource-intensive services.

You can use any one of these methods, or a combination of them, to manage your API traffic effectively. In a future newsletter post, we will explore the different types of rate limiters (client, server, and middle tier) along with the algorithms that can be implemented for each.
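Combining the user, geography, and server dimensions mostly comes down to how you build the counter key and choose its limit. The Python sketch below assumes a hypothetical API key per user, a region string, and an endpoint-to-tier mapping standing in for “which server handles this call”; every limit and multiplier is a made-up placeholder. It counts with a simple one-minute fixed window.

```python
from collections import defaultdict

# Hypothetical requests-per-minute limits for each server tier.
TIER_LIMITS = {"standard": 100, "expensive": 10}

# Hypothetical mapping from endpoint to the tier of server that handles it.
ENDPOINT_TIER = {"/search": "standard", "/reports/export": "expensive"}

# Hypothetical regional multipliers; values below 1.0 tighten the limit.
REGION_FACTOR = {"us-east": 0.5, "eu-west": 1.0}

# (composite key, minute index) -> number of requests seen in that minute.
counts = defaultdict(int)


def limit_key(api_key, region, endpoint):
    """Combine the user, geography, and server dimensions into one key."""
    return f"{api_key}:{region}:{ENDPOINT_TIER[endpoint]}"


def limit_for(region, endpoint):
    """Tier limit scaled by the region's factor (unknown regions use 1.0)."""
    base = TIER_LIMITS[ENDPOINT_TIER[endpoint]]
    return max(1, int(base * REGION_FACTOR.get(region, 1.0)))


def allow(api_key, region, endpoint, now):
    """Count the request in the current one-minute window and check the limit."""
    bucket = (limit_key(api_key, region, endpoint), int(now // 60))
    if counts[bucket] >= limit_for(region, endpoint):
        return False
    counts[bucket] += 1
    return True
```

Here a user calling `/reports/export` from `us-east` gets 5 requests per minute, while the same user in `eu-west` gets 10 on a separate counter. The regional factor is static in this sketch; to lower limits during a region’s peak hours, you could pick the factor based on the local time of day.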

🎉 Too Long Did Read.

In a nutshell, rate limiting is a powerful mechanism to keep your systems running smoothly while ensuring users have a fair experience. Whether you’re a seasoned developer or just dipping your toes into the tech pool, understanding how to implement rate limiting can save you from plenty of headaches down the line. So, treat it like your best friend—because it certainly has your back!

And remember, just because your API can handle the traffic doesn’t mean it should. Be kind, set limits, and let your users enjoy a seamless experience without the chaos.

🗣️ Let us know what you think!

Get featured in the newsletter! Comment your feedback on this post, or tag Byte-Sized Design on LinkedIn or Substack Notes.

If you enjoyed reading this post, feel free to share it with friends, or click the button on this post so more people can discover it on Substack.

🎉 SPONSOR US 🎉

Promote your product or service to over 30,000 tech professionals! Our newsletter connects you directly with software engineers in the industry building new things every day!

Secure Your Spot Now! Don’t miss your chance to reach this key audience. Email us at bytesizeddesigninfo@gmail.com to reserve your space today!

Official Article and Resources!

The official article is for paid subscribers.

Paid subscribers help keep this newsletter maintained for its 30,000+ readers.
